iFood on Delivering 100 Million Events a Month to Restaurants with ScyllaDB Cloud

iFood is the largest Brazilian-based food delivery app company. It connects users, restaurants, and deliverymen using an event-driven architecture using AWS SQS and SNS, with programming in Java and Node.js. Thales' team is responsible for delivering orders' events to restaurant devices at least once, which is currently done using a REST API polling and acknowledgment system.

Learn how their database infrastructure evolved from a PostgreSQL database, but began to show limitations and was a single point of failure. Growing through a few intermediary steps, including Amazon DynamoDB, eventually, turning to ScyllaDB for its data model and collections to condense multiple tables. Using ScyllaDB, iFood reduced the time to process events and acknowledgments (from ~80ms to ~3ms) and reduced costs using ScyllaDB vs DynamoDB by over 9x.

In the above video, iFood’s Thales Biancala shared how his team used ScyllaDB to scale their system to meet that expanding demand. He explained how each order represents around five events in their database, producing well over 100,000,000 events on a monthly basis.

Built on AWS

All of iFood’s infrastructure is in AWS, and they use both Amazon Simple Notification Service (SNS) and Amazon Simple Queue Service (SQS) to deliver those events. Their services combine the use of Java, Node.js, Docker, and Kubernetes. For relational databases, they use PostgreSQL. For NoSQL, they are using Amazon DynamoDB and now ScyllaDB as well.

Thales’ team is responsible for delivering those events to the restaurants via the iFood platform. SNS and SQS allow them to more easily connect with their partners, who are not always the most tech-savvy. “Also, in Brazil there are a lot of places that don’t have a good Internet connection, so a persistent connection is not always the best approach.” That is why they rely on an HTTP delivery service. He described their polling service, which fires off every 30 seconds for each device. Each of those polls invoke a database query. Their mid-term goal was to scale to support 500,000 connected merchants with 1 device each.

They run multiple polling systems in parallel, from the Amazon SNS/SQS proxy service that is doing Kitchen-Polling, to a Connection-Order-Events service based on Apache Ignite, and the Connection-Polling service based on ScyllaDB.

iFood had been using DynamoDB for Connection-Polling, but chose to move that to ScyllaDB instead. An earlier gateway-core service based on PostgreSQL was already decommissioned.

Evolving from SQL to NoSQL

Thales described the legacy PostgreSQL service. Events were indexed in one table and acknowledgements in another. But doing reads required JOINs, which became a performance problem as the number of events and merchants continued to increase. After they hit 10 million orders a month they wanted to explore other solutions, as PostgreSQL proved to be a single point of failure that had, indeed, failed multiple times in the previous year.

They then went to an Apache Ignite service, which performed very well, with reads around 3 milliseconds. However, it proved hard to monitor with the service and the database on the same machine. Also, since Ignite is an in-memory database they still needed to maintain a second database (PostgreSQL) for when they needed to scale up, and to recover from disasters. “To fill up this cache if there is some sort of disaster takes us up to twenty minutes. You can imagine what twenty minutes is during dinner time. It’s a lot of money.”

This is what led them to work on their NoSQL approach. Their main query was to discover all events that were not acknowledged by the device. Their base assumption is that orders belong to merchants, not devices. So they need to know which devices belong to the merchant (the restaurant). When there are new devices, they need to be able to return to them all merchant events from the past eight hours. (History and analysis is done in another part of their platform.)

With that, they arrived at this NoSQL model written in JSON:

//UnackedDeviceEvents

{

DeviceID, // primary-key

EventID, // sort-key

Payload,

Expiration

}

// RestaurantDevices

{

RestaurantId, // primary-key

DeviceId, // sort-key and secondary index

Expiration

}

// RestaurantEvents

{

RestaurantId, // primary-key

EventId, // sort-key

Payload,

Expiration

}

Events initially are indexed in the UnackedDeviceEvents table. Whenever events are acknowledged, they are removed from that table. RestaurantDevices is used when introducing a new device to the platform to relate the restaurant and the devices; the DeviceId is used as a secondary index, to get all the restaurants for that device.

This schema was first used with the Amazon DynamoDB NoSQL database for iFood’s Connection-Polling service. They had all their infrastructure in AWS already, and were eager to use a fully managed solution to help them scale.

However, they quickly discovered that DynamoDB’s autoscaling was not fast enough for their use case. As you can imagine, iFood has highly bursty traffic, with relatively few orders in the morning or mid-afternoon, but large numbers of orders at lunchtime and dinner. Slow autoscaling meant that they could not meet those daily bursts of demand, unless they left a high minimum throughput (which was expensive) or managed scaling themselves. The latter case obviously defeated the purpose of a fully managed service.

In Thales’ opinion DynamoDB’s new on-demand mode was fine.”It’s what we’re using now, but it’s expensive.

Migrating from DynamoDB to ScyllaDB

This was when iFood began working on a second version of their Connection-Polling service, this time using ScyllaDB. To migrate from DynamoDB to ScyllaDB was quite easy, using the same modeling. Here’s the same schema as above rendered in Cassandra Query Language (CQL):

CREATE TABLE unacked_device_events

(

deviceId UUID,

eventId UUID,

payload TEXT,

PRIMARY KEY (deviceId, eventId)

);

CREATE TABLE restaurant_devices

(

restaurantId TEXT,

deviceId UUID,

PRIMARY KEY (restaurantId, deviceId)

);

CREATE INDEX ON restaurant_devices(deviceId);

CREATE TABLE restaurant_events

(

restaurantId TEXT,

eventId UUID,

payload TEXT,

PRIMARY KEY (restaurantId, eventId)

);

Even though DynamoDB uses a document-based JSON notation, and ScyllaDB used the SQL-like CQL, they could use the same query strategy across both.

Thales anticipated migrating using ScyllaDB’s Project Alternator DynamoDB-compatible API would be even easier, since it would allow iFood to keep their current JSON queries.

iFood’s initial deployment for ScyllaDB was on 3x c5.2xlarge machines. Easily meeting their throughput requirements, this reduced their database expenses from $4,500 a month ($54k annually) to $500 a month ($6,000 annually) — a 9X savings. This was just for one service. iFood anticipates that they can apply similar savings across many of the services they run.

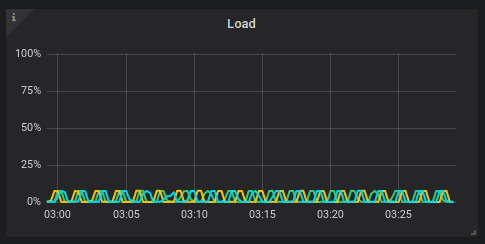

The load on iFood’s service running on ScyllaDB with just 3x c5.2xlarge servers

meant they had plenty of room to scale, and were saving 9x over DynamoDB.

In terms of support, Thales noted “It’s really nice to know both, that it’s open source and we can see what’s happening, and that there is someone there that is working with us.”

One learning they came across in their conversion was that ScyllaDB uses TTL by column, whereas DynamoDB expiration times are set by document.

iFood also needs to manage restaurant devices. And it was proving to be taxing on the system to add new devices in the middle of the day. So they began a new service using ScyllaDB collections. The drawback with this was that reading times were expected to be slower, but this was acceptable because they wanted high availability for this use case. The other advantages of this approach were that it was less complex than how they had been managing it, and they were able to use these events tables to populate their Apache Ignite cache.

CREATE TABLE events

(

eventId UUID,

restaurantId INT,

payload TEXT,

ackeddevices SET<TEXT>,

PRIMARY KEY(eventId)

);

CREATE INDEX ON events(restaurantId);At first, they had created these insert statements:

'INSERT INTO events (eventId, restaurantId, payload) VALUES (?.?.?) using TTL ?'; 'UPDATE events SET ackeddevices = ackeddevices = ? WHERE eventId=?';

However, there was an error that they learned the hard way, as Thales explained. TTL works in ScyllaDB on each column by itself. So eventId, restaurantId, and payload each have their own TTL. So instead they had to add a SELECT TTL statement:

'INSERT INTO events (eventId, restaurantId, payload) VALUES (?.?.?) using TTL ?'; 'SELECT TTL(payload) FROM events WHERE eventId=?'; 'UPDATE events SET ackeddevices = ackeddevices = ? WHERE eventId=?';

The result of adding this service was noticeable. As stated above, provisioning this with ScyllaDB was one-ninth the expense of DynamoDB. But also, the time to handle index events went from ~80ms with DynamoDB to ~3ms with ScyllaDB. That meant one-eighth the infrastructure usage for writes. The solution further reduced complexity for iFood, resulting in 40% less code.

There was an increase in read times, though they were still acceptable. Also, Thales noted collections updates are CPU intensive and generate tombstones, so he cautioned others to use them carefully.

Final Thoughts

ScyllaDB was far less expensive than DynamoDB, but you may have to take into account managing a cluster. Thales noted, however, that managing the cluster at iFood was relatively straightforward and he had no problems managing it as a developer. He also noted you can use the new ScyllaDB Cloud (NoSQL DBaaS), a fully-managed version of ScyllaDB.

He advised developers to get to know all of the features of your database before using them — “Collections updates are not cheap!” Each update incurs a tombstone which can slow down reads, and also requires more work on garbage collection. Thales noted he was still toying with gc_grace to improve performance.

Thales further noted ScyllaDB’s secondary indexes are global by default, which is a good fit for his second use case, where the index has as high cardinality as the number of merchants (over 100,000 at that time). But ScyllaDB also offers local secondary indexes, “and you need to know when to use each.”