Stadia Maps: Using ScyllaDB to Serve Maps in Milliseconds

Ian Wagner and Luke Seelenbinder were consulting with a perfectionist client who demanded an aesthetically beautiful map for their website. It also needed to render lightning fast and be easy to configure. On top of that, Ian and Luke saw that even small business clients like theirs could spend as much as $700 a month on existing services from the global mapping giants. To address these shortcomings, they founded Stadia Maps with the goal of driving down prices using open mapping programs, while delivering a far more satisfying look and feel and a better overall user experience.

Stadia Maps launched in 2017 and accomplished what its founders set out to do. Growth and success then led to a new requirement: the ability to scale their systems to deliver maps on a global basis. The maps they generate come from a PostGIS service using OpenStreetMap geographical data. This system produces map tiles, which consist of either line-based vector map data, known as Mapbox Vector Tiles (MVTs), or a bitmap rendering of the same area.

To scale this service globally, Stadia Maps does not serve all of these maps directly out of their PostGIS service. Instead they have a pipeline, with PostGIS providing these map tiles to Scylla. Scylla globally distributes these tiles and serves them out for rendering on the client.

How many tiles does it take to map the world?

This all depends on the zoom level you need to support. Zoom level 0 is a map of the entire world on a single map tile. Each additional level of zoom multiplies the number of tiles by four. So the first zoom level has four tiles, the second sixteen, and so on. Thus, the nineteenth level of zoom, Z19, requires 4^19 tiles (about 274.9 billion).
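The arithmetic above can be checked with a few lines of Python. This is only a sketch: the function names `tiles_at_zoom` and `tiles_through_zoom` are made up for illustration and are not part of any Stadia Maps or Scylla API.

```python
def tiles_at_zoom(zoom: int) -> int:
    """Number of tiles needed to cover the world at one zoom level."""
    if zoom < 0:
        raise ValueError("zoom must be non-negative")
    # Every tile splits into a 2 x 2 grid at the next level, so the count is 4**zoom.
    return 4 ** zoom


def tiles_through_zoom(max_zoom: int) -> int:
    """Total tiles across all levels 0..max_zoom (sum of a geometric series)."""
    if max_zoom < 0:
        raise ValueError("max_zoom must be non-negative")
    return (4 ** (max_zoom + 1) - 1) // 3


if __name__ == "__main__":
    for z in (0, 1, 2, 14, 19):
        print(f"Z{z}: {tiles_at_zoom(z):,} tiles")
```

Because the count grows by a factor of four per level, each extra zoom level costs more than all of the levels before it combined.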

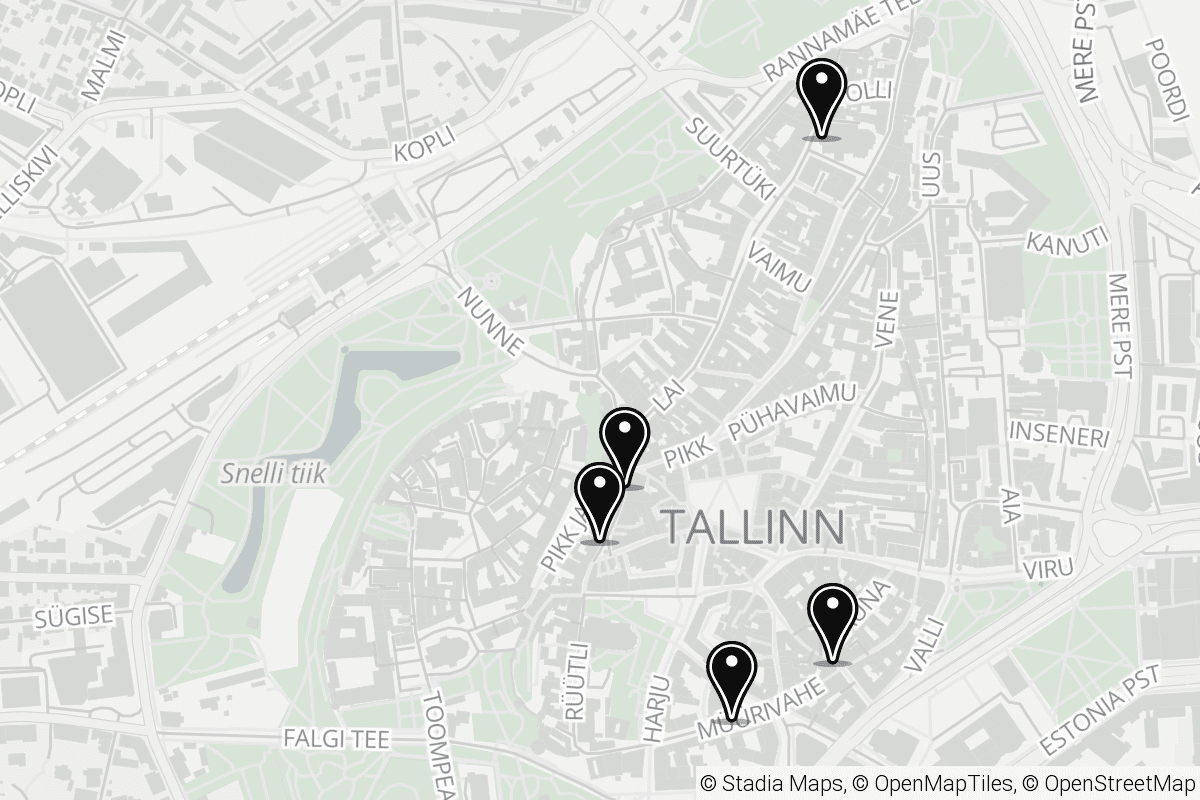

It is impractical and inefficient to pre-render that many tiles, especially considering that 70% of the planet’s surface is water (making those tiles merely the same uniform blue). Instead, Stadia Maps pre-renders tiles to the Z14 scale — the equivalent of about a 1:35,000 scale map, requiring 268 million tiles to map the entire world — and produces the rest as needed in an on-demand fashion.

An example of a Stadia Map for Tallinn, Estonia at the Z14 level of zoom.

If a map tile is already stored in Scylla, it is served out of Scylla directly. In this case, Scylla acts as a persistent cache. If the tile is not yet in Scylla, the Stadia Maps service asks the PostGIS system to render it, then stores the result in Scylla for future queries.
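This lookup is a read-through cache. The sketch below illustrates the pattern only; it is not Stadia Maps' code (their application is written in Rust). A plain dictionary stands in for the Scylla table, and `fake_render` is a hypothetical placeholder for the PostGIS rendering step.

```python
from typing import Callable, Dict, Tuple

TileKey = Tuple[int, int, int]  # (zoom, x, y); a hypothetical key layout


class ReadThroughTileCache:
    """Serve a tile from the store if present; otherwise render it and store it."""

    def __init__(self, render: Callable[[TileKey], bytes]) -> None:
        self._store: Dict[TileKey, bytes] = {}  # stand-in for the Scylla table
        self._render = render                   # stand-in for the PostGIS renderer
        self.render_calls = 0                   # counts cache misses

    def get(self, key: TileKey) -> bytes:
        tile = self._store.get(key)
        if tile is not None:
            return tile              # cache hit: no rendering needed
        self.render_calls += 1
        tile = self._render(key)     # cache miss: render on demand
        self._store[key] = tile      # keep the result for future requests
        return tile


def fake_render(key: TileKey) -> bytes:
    """Hypothetical renderer that just encodes the key."""
    zoom, x, y = key
    return f"tile-{zoom}-{x}-{y}".encode()


if __name__ == "__main__":
    cache = ReadThroughTileCache(fake_render)
    cache.get((15, 1, 2))
    cache.get((15, 1, 2))
    print("renders performed:", cache.render_calls)
```

Only the first request for a given tile pays the rendering cost; every later request is a plain key lookup.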

Each of these tiles is compressed before being stored in Scylla. A vector-based MVT tile might be ~50 KB of data uncompressed, but can typically be shrunk down to 10-20 KB for storage. A plain water tile can be shrunk even more, to as little as 1 KB.
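For a rough sense of scale, these sizes can be multiplied by the Z14 tile count. The sketch below is back-of-the-envelope arithmetic under loud assumptions, not a figure reported by Stadia Maps: it pretends every Z14 tile is a 10-20 KB vector tile (which overstates the total, since water tiles are far smaller), counts only the Z14 level, uses decimal units, and ignores replication. The function name is hypothetical.

```python
Z14_TILES = 4 ** 14  # 268,435,456 tiles at zoom level 14


def storage_terabytes(tile_count: int, kb_per_tile: float) -> float:
    """Rough storage in decimal terabytes for tile_count tiles of a given size."""
    total_bytes = tile_count * kb_per_tile * 1_000  # 1 KB taken as 1,000 bytes
    return total_bytes / 1e12


if __name__ == "__main__":
    # Assumed bounds: every tile at the low or high end of the compressed range.
    low = storage_terabytes(Z14_TILES, 10)
    high = storage_terabytes(Z14_TILES, 20)
    print(f"Z14 upper-bound estimate: {low:.2f} to {high:.2f} TB per copy")
```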

Raster data (bitmapped images) takes up more space, about 20-50 KB per object. However, these are not cached indefinitely; Stadia Maps evicts them based on a Time-to-Live (TTL) to keep their total data size under management relatively steady.
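TTL-based eviction can be sketched in a few lines. In Scylla itself the TTL is attached when the row is written and the database expires it; the in-memory class below only mimics that behavior for illustration. The class name, key format, and 60-second TTL are all assumptions.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TtlTileStore:
    """In-memory stand-in for a table whose rows expire after a fixed TTL."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._entries: Dict[str, Tuple[float, bytes]] = {}

    def put(self, key: str, tile: bytes) -> None:
        # Record the expiry time alongside the tile at write time.
        self._entries[key] = (self._clock() + self._ttl, tile)

    def get(self, key: str) -> Optional[bytes]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, tile = entry
        if self._clock() >= expires_at:
            del self._entries[key]  # expired: drop it and report a miss
            return None
        return tile


if __name__ == "__main__":
    store = TtlTileStore(ttl_seconds=60.0)
    store.put("raster/14/1/2", b"png-bytes")
    print(store.get("raster/14/1/2") is not None)
```

Unlike LRU, this needs no bookkeeping on reads: a tile's lifetime is fixed when it is written, regardless of how often it is requested.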

Moving from CockroachDB to Scylla

When Stadia Maps first set out to deliver these map tiles at a global scale, they began with the distributed SQL database CockroachDB. However, they realized their use case did not need its strong consistency model, and that CockroachDB offered no way to adjust consistency settings. So what is generally considered one of CockroachDB’s strengths was, for Stadia Maps, actually a hindrance. Users did not need strongly consistent data (which introduces latency); they wanted the maps to render fast. With long-tail latencies of one second per tile, CockroachDB was simply too slow. Users were impatiently watching a map painted out before their eyes tile by tile in real time. That is, if it was served at all: users were also getting hit with HTTP 503 timeout errors. The root cause turned out to be misbehaving CockroachDB servers pushed to their limits.

Also, because most tiles were only requested once a month, or even once a year, Stadia Maps had a problem trying to implement a Least Recently Used (LRU) algorithm. For them, a Time To Live (TTL) was a more natural fit for data eviction.

This was when Stadia Maps decided to move to Scylla and its eventually consistent global distribution capabilities. For example, a user in Tokyo planning a business trip to London would prefer that their map of London be served from close by, out of an Asian datacenter, for speed of response. Fetching data about London from a UK-based server would require long global round trips.

Long-tail latencies, measured as p95 and p99, are vitally important. These are the latency thresholds below which 95% (or 99%) of responses complete. Because many map tiles can be returned for even a single request, there is mathematically a significant chance that one or more of them will arrive late. As Luke notes, “If even one tile doesn’t load, that looks really bad.”
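The math behind that claim is short. If each tile independently has a 95% chance of beating the p95 threshold, the chance that all of n tiles beat it is 0.95^n, so the chance of at least one slow tile is 1 - 0.95^n. The sketch below assumes independent tile latencies, and the tile counts are illustrative rather than numbers from Stadia Maps.

```python
def prob_any_slow(tiles: int, percentile: float) -> float:
    """Chance that at least one of `tiles` independent responses is slower
    than the latency threshold at the given percentile (e.g. 0.95 for p95)."""
    if tiles < 1:
        raise ValueError("tiles must be at least 1")
    if not 0.0 < percentile < 1.0:
        raise ValueError("percentile must be between 0 and 1")
    # P(all fast) = percentile ** tiles, so P(any slow) is the complement.
    return 1.0 - percentile ** tiles


if __name__ == "__main__":
    for n in (1, 10, 20):  # hypothetical tiles per map view
        print(f"{n:>2} tiles: "
              f"p95 miss {prob_any_slow(n, 0.95):.1%}, "
              f"p99 miss {prob_any_slow(n, 0.99):.1%}")
```

Under these assumptions, a view made of a couple of dozen tiles is more likely than not to include at least one tile slower than p95, which is why the tail matters more than the average.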

Stadia Maps’ end-to-end latency requirement is 250 ms for p95 — about the same as the blink of an eye. That includes everything, from making the request at a user’s browser, to acknowledging that request at the Stadia Maps API, processing the request, fetching the data from the database, then returning it and rendering it in the browser for the user. The database itself has only a fraction of that time to respond — less than 10 ms for the worst case.

To meet this service level, Stadia Maps has three primary datacenters around the world. All data is replicated to all datacenters. However, the consistency required of that data is minimal: a consistency level of one (CL=ONE). If a user is served a stale tile, it is usually only a few hours old, and the likelihood of much having changed in those few hours is minimal.

If there is no copy of the tile in the local cluster, the service tries to fetch it from the other Scylla clusters. If that fails, it asks the local PostGIS service to render the tile, and the result is then written back into Scylla for future requests. This last path is still relatively slow, yet compared with CockroachDB’s p99, the response time with Scylla dropped by nearly half, to about 500 ms, well within timeout ranges.
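The fallback order described here can be sketched as a three-tier lookup. This is an illustration of the control flow only, not Stadia Maps' implementation: dictionaries stand in for the local and remote Scylla clusters, `render` is a hypothetical stand-in for the local PostGIS service, and the returned source label exists purely to make the path visible.

```python
from typing import Callable, Mapping, MutableMapping, Sequence, Tuple

TileKey = Tuple[int, int, int]  # (zoom, x, y); a hypothetical key layout


def fetch_tile(
    key: TileKey,
    local: MutableMapping[TileKey, bytes],
    remotes: Sequence[Mapping[TileKey, bytes]],
    render: Callable[[TileKey], bytes],
) -> Tuple[bytes, str]:
    """Return (tile, source), where source is 'local', 'remote', or 'rendered'."""
    # 1. Fastest path: the tile is already in the local cluster.
    tile = local.get(key)
    if tile is not None:
        return tile, "local"

    # 2. Next: ask the other clusters, in order.
    for remote in remotes:
        tile = remote.get(key)
        if tile is not None:
            return tile, "remote"

    # 3. Slowest path: render the tile and store it for future requests.
    tile = render(key)
    local[key] = tile
    return tile, "rendered"


if __name__ == "__main__":
    local_cluster: dict = {}
    remote_clusters = [{(14, 1, 1): b"from-remote"}, {}]
    renderer = lambda key: b"freshly-rendered"

    print(fetch_tile((14, 1, 1), local_cluster, remote_clusters, renderer))
    print(fetch_tile((15, 2, 2), local_cluster, remote_clusters, renderer))
    print(fetch_tile((15, 2, 2), local_cluster, remote_clusters, renderer))
```

The sketch does not copy a remote hit into the local store, on the assumption that replication between datacenters handles that; only freshly rendered tiles are written explicitly.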

Once the decision was made, Stadia Maps’ migration from CockroachDB to Scylla was swift. It took only one month from the first commit of code to the day Scylla was in production. Since moving to Scylla, according to Luke, “We have not found the limit” with regard to serving requests and throughput.

Working Side-by-Side

Scylla is a “greedy” system, generally claiming as much CPU and RAM as the server can provide for fast performance. Yet Stadia Maps configured Scylla with resource limits so that its map rendering servers could be deployed side by side with Scylla on the same hardware. The map rendering engine requires a great deal of CPU and RAM but not a lot of storage, whereas Scylla requires a great deal of storage but, in this case, comparatively little CPU and RAM. By configuring both systems optimally, Stadia Maps gets the greatest utility out of their shared hardware resources.

Even now, Stadia Maps continues to optimize their deployment. Their application, written in Rust, is continually improved. “We keep trying to cut milliseconds all over the place,” Luke notes. The fewer cycles they need to steal from the raster renderer, the better.

Discover Scylla for Yourself

Stadia Maps found Scylla in their research for NoSQL systems that could scale and perform in their demanding environment. We hope what you’ve read here will inspire you to try it out for your own applications and use cases. To get started, download Scylla today and join our open source community.