Top Mistakes with ScyllaDB: Configuration

Get an inside look at the most common ScyllaDB configuration mistakes – and how to avoid them.

In earlier posts, we went deep into the infrastructure components, why they matter, and what to consider when selecting an appropriate ScyllaDB infrastructure. Now, let’s shift focus to an actual ScyllaDB deployment and look at some of the major mistakes my colleagues and I have seen in real-world deployments.

This is the third installment of this blog series. If you missed them, you may want to read back on the important infrastructure and storage considerations.

Also, you might want to look at this short video we created to assist you with defining your deployment type according to your requirements:

Now on to the configuration mistakes…

Running an Outdated ScyllaDB Release

Every once in a while, we see (and try to assist) users running a version that’s no longer supported. Admittedly, ScyllaDB has a quick development cycle. That is the price paid to bring cutting-edge technology to our users, and it’s up to you to ensure that you’re running a current version. Otherwise, you might miss important correctness, stability, and performance improvements that are unlikely to be backported to your current release branch.

ScyllaDB ships with the scylla-housekeeping utility enabled by default. This lets you know whenever a new patch or major release comes out. For example, the following message is printed to the system logs when you are running the latest major release but are a few patch releases behind:

# /opt/scylladb/scripts/scylla-housekeeping --uuid-file /var/lib/scylla-housekeeping/housekeeping.uuid --repo-files '/etc/apt/sources.list.d/scylla*.list' version --mode cr

Your current Scylla release is 5.2.2 while the latest patch release is 5.2.9, update for the latest bug fixes and improvements

However, the following message shall be displayed when you are behind a major release:

# /opt/scylladb/scripts/scylla-housekeeping --uuid-file /var/lib/scylla-housekeeping/housekeeping.uuid --repo-files '/etc/apt/sources.list.d/scylla*.list' version --mode cr

Your current Scylla release is 5.1.7, while the latest patch release is 5.1.18, and the latest minor release is 5.2.9 (recommended)
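If you collect system logs centrally, you could extract these versions automatically. The following is a minimal Python sketch, assuming the log lines look like the two samples above. The regular expressions and the `parse_housekeeping` helper are our own illustration and not part of scylla-housekeeping, and the wording of the message may differ between versions.

```python
import re

# Patterns modelled on the two sample messages shown above (an assumption:
# the exact wording may change between scylla-housekeeping versions).
VERSION = r"(\d+(?:\.\d+)+)"
PATTERNS = {
    "current": re.compile(r"current Scylla release is " + VERSION),
    "latest_patch": re.compile(r"latest patch release is " + VERSION),
    # The tool calls this a "minor" release; this post calls it a major release.
    "latest_branch": re.compile(r"latest minor release is " + VERSION),
}


def parse_housekeeping(line: str) -> dict:
    """Return the versions mentioned in one housekeeping log line."""
    found = {}
    for key, pattern in PATTERNS.items():
        match = pattern.search(line)
        if match:
            found[key] = match.group(1)
    return found


sample = ("Your current Scylla release is 5.1.7, while the latest patch release "
          "is 5.1.18, and the latest minor release is 5.2.9 (recommended)")
print(parse_housekeeping(sample))
```

A line that only mentions a newer patch release simply yields no `latest_branch` key, which makes it easy to tell the two situations apart.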

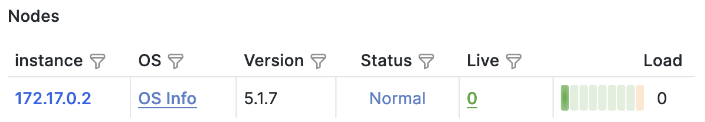

As you can see, there’s a clear distinction between major and patch (minor) releases. Similarly, whenever you deploy the ScyllaDB Monitoring Stack (more on that later), you’ll also have a clear view into which versions your nodes are currently running. This helps you determine when to start planning for an upgrade:

But when should you upgrade? That’s a fair question, which requires an explanation of how our release life cycle works.

Our release cycle begins on ScyllaDB Open Source (OSS), which brings production-ready features and improvements to our community. The OSS branch evolves and receives bug and stability fixes for a while, until it eventually gets branched to our next ScyllaDB Enterprise. From here, the Enterprise branch receives additional Enterprise-only features (such as Workload Prioritization, Encryption at Rest, Incremental Compaction Strategy, etc) and runs through a variety of extended tests, such as longevity, regression and performance tests, functionality testing, and so on. It continues to receive backports from its OSS sibling. As the Enterprise branch matures and passes through rigorous testing, it eventually becomes a ScyllaDB Enterprise release.

Another important aspect worth highlighting is the Open Source to Enterprise upgrade path. Notably, ScyllaDB Enterprise releases are supported for much longer than Open Source releases. We have previously blogged about the Enterprise life cycle. For Enterprise, it is worth highlighting that the versioning numbers (and their meaning) change slightly, although the overall idea remains.

Whether you are running ScyllaDB Enterprise or OSS, ScyllaDB releases are split into two categories:

- Major releases: These introduce new features, enhancements, newer functionality, all that’s good – really. Major releases are identified by the first two numbers of the version, such as 2023.1 (for Enterprise) or 5.2 (for Open Source).

- Minor (patch) releases: Primarily contain bug and stability fixes, although sometimes we might introduce new functionality. A patch release is known by its last digits on the version number. For example, 5.1.18 indicates the eighteenth patch release on top of the 5.1 OSS branch.

ScyllaDB supports the two latest major releases. At the time of this writing, the latest ScyllaDB Open Source release is 5.2. Thus, we also support the 5.1 branch, but no release older than it. We therefore recommend that you plan your upgrades in a timely manner in order to stay on a current version. Otherwise, if you report any problem, our response will likely begin with a request to upgrade.
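The "two latest major releases" rule is easy to encode in a fleet inventory check. Here is a small Python sketch under stated assumptions: `branch_of` and `is_supported` are hypothetical helpers, and the list of branches is something you maintain yourself (the values below mirror the example in this post, and the older entry is only illustrative).

```python
def branch_of(version: str) -> str:
    """Return the major branch, i.e. the first two numbers: '5.1.18' -> '5.1'."""
    parts = version.split(".")
    if len(parts) < 2:
        raise ValueError(f"not a full version string: {version!r}")
    return ".".join(parts[:2])


def is_supported(version: str, branches_newest_first: list) -> bool:
    """True if the version belongs to one of the two latest major releases."""
    return branch_of(version) in branches_newest_first[:2]


# Caller-maintained list, newest first (illustrative values).
OSS_BRANCHES = ["5.2", "5.1", "5.0"]

print(is_supported("5.1.18", OSS_BRANCHES))  # True
print(is_supported("5.0.1", OSS_BRANCHES))   # False
```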

If you’re upgrading from an Open Source version to a ScyllaDB Enterprise version, refer to our documentation for supported upgrade paths. For example, you may directly upgrade from ScyllaDB 5.2 to our latest ScyllaDB Enterprise 2023.1, but you can’t upgrade to an older Enterprise release.

Finally, let’s address a common question we often receive: “Can I directly upgrade from <super ancient release> to <fresh new good looking version>?” No, you cannot. It is not that it won’t work (in fact, it might), but it is definitely not an upgrade path that we certify or test against. The proper upgrade involves running major upgrades one at a time, until you land on the target release you are after. This is yet another reason for you to do your due diligence and remain current.
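To make "one major upgrade at a time" concrete, the sketch below lists the intermediate branches between where you are and where you want to be. It is only an illustration: `upgrade_path` is a hypothetical helper, the ordered branch list is an assumption you must supply, and the documentation remains the authority on which paths are actually certified.

```python
def upgrade_path(current: str, target: str, branches_oldest_first: list) -> list:
    """List every major branch to upgrade to, in order, from current to target."""
    start = branches_oldest_first.index(current)  # ValueError if unknown branch
    end = branches_oldest_first.index(target)
    if end < start:
        raise ValueError("target is older than current; downgrades are not covered")
    return branches_oldest_first[start + 1:end + 1]


# Caller-maintained list of major branches, oldest first (illustrative values).
BRANCHES = ["4.6", "5.0", "5.1", "5.2"]

print(upgrade_path("4.6", "5.2", BRANCHES))  # ['5.0', '5.1', '5.2']
```

Each entry in the result is a separate rolling upgrade, with its own upgrade guide to follow.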

Running a World Heritage OS

We get it, you love RHEL 7 or Ubuntu 18.04. We love them too! 🙂 However, it is time for us to let them go.

ScyllaDB’s close-to-the-hardware design greatly relies on kernel capabilities for performance. Over the years, we have seen performance problems arise from using outdated kernels. For example, back in 2016 we found out that the XFS support for AIO appending writes wasn’t great. More recently, we also reported a regression in AWS kernels with great potential to undermine performance.

The recommendation here is to ensure you run a reasonably recent Linux distribution and upgrade your OS every now and then. Even better, if you are running in the cloud, you may want to check out our ScyllaDB EC2, GCP and Azure images. ScyllaDB images go through rigorous testing to ensure that your ScyllaDB deployment will squeeze every last bit of performance from the VM it runs on.

The ScyllaDB Images often receive OS updates and we strive to keep them as current as possible. However, remember that once the image gets deployed it becomes your responsibility to ensure you keep the base OS updated. Although it is perfectly fine to simply upgrade ScyllaDB and remain under the same cloud image version you originally provisioned, it is worth emphasizing that – over time – the image will become outdated, up to the point where it may be easier to simply replace it with a fresh new one.

Going through the right (or wrong) way to keep your existing OS/cloud images updated is beyond the scope of this article. There are many variables and processes to account for. It is still worth highlighting the fact that we will handle it for you in ScyllaDB Cloud, which is a fully-managed database-as-a-service. Here, all instances are frequently updated for both security and kernel improvements, and cloud images are frequently updated to bring you the latest in OSS technology.

Diverging Configuration

This is an interesting one, and you might be a victim of it. First, we need to explain what we mean by “diverging configuration”, and how it actually manifests itself.

In a ScyllaDB cluster, each node is independent of the others. This means that a setting on one node won’t have any effect on other nodes until you apply the same configuration cluster-wide. As a result, users sometimes end up with settings applied to only a few nodes in their topology.

Under most circumstances, correcting the problem is fairly straightforward: Simply replicate the missing parameters to the corresponding nodes and perform a rolling restart (or SIGHUP the process, if the parameter happens to be a LiveUpdatable parameter) accordingly.
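Spotting the divergence in the first place is the harder part. As a rough sketch, assuming you have already loaded each node’s settings into a dictionary (for example, by parsing its scylla.yaml with a YAML library of your choice), the following Python snippet reports every setting that is not identical on all nodes. The `find_divergent_settings` helper, the node names, and the sample values are all hypothetical.

```python
MISSING = "<unset>"  # marker for a setting that a node does not define at all


def find_divergent_settings(node_configs: dict) -> dict:
    """Return {setting: {node: value}} for settings that differ between nodes."""
    all_keys = set()
    for settings in node_configs.values():
        all_keys.update(settings)

    divergent = {}
    for key in sorted(all_keys):
        per_node = {node: settings.get(key, MISSING)
                    for node, settings in node_configs.items()}
        # Compare by repr() so unhashable values (lists, dicts) are handled too.
        if len({repr(value) for value in per_node.values()}) > 1:
            divergent[key] = per_node
    return divergent


# Made-up example: node3 is missing a setting that the other two nodes carry.
configs = {
    "node1": {"cluster_name": "prod", "compaction_throughput_mb_per_sec": 0},
    "node2": {"cluster_name": "prod", "compaction_throughput_mb_per_sec": 0},
    "node3": {"cluster_name": "prod"},
}
for setting, values in find_divergent_settings(configs).items():
    print(setting, values)
```

Running a check like this from your automation after every configuration change or node replacement keeps a partial rollout from going unnoticed.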

In other situations, however, the problem may manifest itself in a seemingly silent way. Imagine the following hypothetical scenario:

- You were properly performing your due diligence and closely upgrading your 3-node ScyllaDB cluster for the past 2 or 3 years, just like we recommended earlier, and are now running the latest and greatest version of our database.

- Eventually one of your nodes died, and you decided to replace it. You spun up a new ScyllaDB AMI and started the node replacement procedure.

- Everything worked fine until, weeks later, you noticed that the node you replaced has a different shard count in Monitoring than the rest of the nodes. You double check everything, but can’t pinpoint the problem.

What happened?

Short answer: You probably forgot to run scylla_setup during the upgrades carried out in the first step, and you overlooked that its tuning logic changed between versions. When you replaced a node with an updated AMI, it auto-tuned itself, resulting in the correct configuration on that node only.

There are plenty of other situations where you may end up with a similar misconfigured node, such as forgetting to update your ScyllaCluster deployment definitions (such as CPU and Memory) upon scaling up your Kubernetes instances.

The main takeaway here is to always keep a consistent configuration across your ScyllaDB cluster and implement mechanisms to ensure you re-run scylla_setup whenever you perform major upgrades. Granted, we don’t change the setup logic all that often. However, when we do, it is really important for you to pick up its changes because it may greatly improve your overall performance.

Not Configuring Proper Monitoring

The worst offense that can be done to any distributed system is neglecting the need to monitor it. Yet, it happens all too often.

By monitoring, we definitely don’t mean you should stare at the screen. Rather, we simply recommend that users deploy the ScyllaDB Monitoring Stack in order to have insights when things go wrong.

Note that we specifically mentioned using ScyllaDB Monitoring rather than other third party tools. The reasons are plenty:

- It is open and free to everyone

- It is built on top of well-known technologies like Grafana and Prometheus (VictoriaMetrics support was introduced in 4.2.0)

- Metrics and dashboards are updated regularly as we add more metrics

- It is extremely easy to upload Prometheus data to our support should you ever face any difficulties.

Of course, you can monitor ScyllaDB with other solutions. But if you eventually want assistance from ScyllaDB and you can’t provide meaningful metrics, this can impact our ability to assist.

If you have already deployed the ScyllaDB Monitoring, remember to also upgrade it on a regular basis to fully benefit from the additional functionality, security fixes, and other goodies it brings.

In summary, allow me to quote a presentation from Henrik Rexed from our last Performance Engineering Masterclass. The main Observability pillars to understand the behavior of a distributed system involve having ready access and visibility to: logs, events, metrics, and traces. Therefore, stop flying blind and just deploy our Monitoring stack 🙂

Ignoring Alerts

Here’s a funny story: Once we were doing our due diligence with one of our on-premise Enterprise users and realized one of their nodes was unreachable from their monitoring. We asked whether they were aware of any problems. Nope. A bit more digging, and we realized the cluster had been in that state for the past 2 weeks. Dang!

Fear not, the real story happened at an on-premise facility and it had a happy ending, with the root cause being identified as a network partition affecting the node.

Things like that really happen. While convention says that a database is pretty much a “set it and forget it” thing, other infrastructure components aren’t, and you must be ready to react quickly when things go wrong.

Although conditions such as a node down or high disk space utilization are relatively easy to spot, others such as higher latencies and data imbalances are much harder to catch unless you integrate your Monitoring with an alerting solution.

Fortunately, it’s quite simple. When you deploy ScyllaDB Monitoring, there are several built-in alerting conditions out-of-the-box. Just be sure to connect AlertManager with your favorite alerting mechanism, such as Slack, PagerDuty, or even e-mail.
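To illustrate the kind of condition behind the unreachable-node story, here is a minimal Python sketch that inspects the JSON returned by a Prometheus instant query for the standard `up` metric and lists the targets that could not be scraped. It is not a replacement for the built-in alerts or AlertManager. The `unreachable_instances` helper and the sample payload (addresses, job label) are assumptions made for the example, and fetching the response from your Prometheus server is left out.

```python
def unreachable_instances(query_response: dict) -> list:
    """Return the instances whose `up` sample is 0 in a Prometheus query result."""
    if query_response.get("status") != "success":
        raise ValueError("Prometheus query did not succeed")

    down = []
    for sample in query_response["data"]["result"]:
        _timestamp, value = sample["value"]  # the value arrives as a string
        if float(value) == 0:
            down.append(sample["metric"].get("instance", "<unknown>"))
    return sorted(down)


# Made-up payload in the shape returned by /api/v1/query?query=up
response = {
    "status": "success",
    "data": {
        "resultType": "vector",
        "result": [
            {"metric": {"instance": "10.0.0.1:9180", "job": "scylla"},
             "value": [1700000000.0, "1"]},
            {"metric": {"instance": "10.0.0.2:9180", "job": "scylla"},
             "value": [1700000000.0, "0"]},
        ],
    },
}
print(unreachable_instances(response))  # ['10.0.0.2:9180']
```

A check like this only tells you something if someone looks at it, which is exactly why routing alerts to a channel people actually watch matters.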

No Automation

Unsurprisingly, most of the mistakes covered thus far could be avoided or addressed with automation.

Although ScyllaDB does not impose a particular automation solution on you (nor should we, as each organization has its own way of managing processes), we do provide Open Source tooling for you to work with so that you won’t have to start from scratch.

For example, the ScyllaDB Cloud Images support passing User-provided data during provisioning so you can easily integrate with your existing Terraform (OpenTofu anybody?) scripts.

Speaking of Terraform, you can rely on the ScyllaDB Cloud Terraform provider to manage most of the aspects related to your ScyllaDB Cloud provisioning. Not a Terraform user? No problem. Refer to the ScyllaDB Cloud API reference and start playing.

And what if you are not a ScyllaDB Cloud user and don’t use ScyllaDB Cloud images? We’ve still got you covered! You should definitely get started with our Ansible roles for managing, upgrading, and maintaining your ScyllaDB, Monitoring and Manager deployments.

Wrapping Up

This article covered most of the aspects and due diligence required for keeping a ScyllaDB cluster up to date, including examples of how remaining current may greatly boost your performance. We also covered the importance of observability in preventing problems and discussed several options for you to automate, orchestrate, and manage ScyllaDB.

The blog series up until now has primarily covered aspects tied to a ScyllaDB deployment. At this point, you should have a rock-solid ScyllaDB cluster running on adequate infrastructure.

Next, let’s shift focus and discuss how to properly use what we’ve set up. We’ll cover application-specific topics, such as load balancing policies, concurrency, consistency levels, timeout settings, idempotency, token and shard awareness, speculative executions, replication strategies … Well, you can see where this is going. See you there!