Benchmarking MongoDB vs ScyllaDB: Performance, Scalability & Cost

Read how MongoDB and ScyllaDB compare on throughput, latency, scalability, and price-performance in this third-party benchmark

To help the community better understand options and tradeoffs when it comes to selecting a high performance and scalable NoSQL database, benchANT, an independent benchmarking firm, recently conducted an extensive benchmark comparing the well-known MongoDB Atlas with the rising ScyllaDB Cloud. This post is written by benchANT Co-Founder and CTO, Dr. Daniel Seybold.

NoSQL databases promise to provide high performance and horizontal scalability for data-intensive workloads. In this report, we benchmark the performance and scalability of the market-leading general-purpose NoSQL database MongoDB and its performance-oriented challenger ScyllaDB.

- The benchmarking study is carried out against the DBaaS offers of each database vendor to ensure a fair comparison.

- The DBaaS clusters range from 3 to 18 nodes, classified in three scaling sizes that are comparably priced.

- The benchmarking study comprises three workload types that cover read-heavy, read-update and write-heavy application domains with data set sizes from 250GB to 10TB.

- The benchmarking study compares a total of 133 performance measurements that range from throughput (per cost) to latencies to scalability.

ScyllaDB outperforms MongoDB in 132 of 133 measurements.

- For all the applied workloads, ScyllaDB provides higher throughput (up to 20 times) results compared to MongoDB.

- ScyllaDB achieves P99 latencies below 10 ms for insert, read and update operations for almost all scenarios. In contrast, MongoDB achieves P99 latencies below 10 ms only for certain read operations, while the MongoDB insert and update latencies are up to 68 times higher compared to ScyllaDB.

- ScyllaDB achieves up to near linear scalability while MongoDB shows less efficient horizontal scalability.

- The price-performance ratio clearly shows the strong advantage of ScyllaDB with up to 19 times better price-performance ratio depending on the workload and data set size.

In summary, this benchmarking study shows that ScyllaDB provides a great solution for applications that operate on data sets in the terabyte range and that require high throughput (e.g., over 50K OPS) while providing predictable low latency for read and write operations.

Access Benchmarking Tech Summary Report

About this NoSQL Benchmark

The NoSQL database landscape is continuously evolving. Over the last 15 years, it has already introduced many options and tradeoffs when it comes to selecting a high performance and scalable NoSQL database. In this report, we address the challenge of selecting a high performance database by evaluating two popular NoSQL databases: MongoDB, the market leading general purpose NoSQL database and ScyllaDB, the high-performance NoSQL database for large scale data. See our technical comparison for an in-depth analysis of MongoDB’s and ScyllaDB’s data model, query languages and distributed architecture.

For this project, we benchmarked both database technologies to get a detailed picture of their performance, price-performance and scalability capabilities under different workloads. For creating the workloads, we use the YCSB, an open source and industry standard benchmark tool. Database benchmarking is often said to be non-transparent and to compare apples with pears. In order to address these challenges, this benchmark comparison is based on benchANT’s scientifically proven Benchmarking-as-a-Service platform. The platform ensures a reproducible benchmark process (for more details, see the associated research papers on Mowgli and benchANT) which follows established guidelines for database benchmarking.

This benchmarking project was carried out by benchANT and was sponsored by ScyllaDB with the goal to provide a fair, transparent and reproducible comparison of both database technologies. For this purpose, all benchmarks were carried out on the database vendors’ DBaaS offers, namely MongoDB Atlas and ScyllaDB Cloud, to ensure a comparable production ready database deployment. Further, the applied benchmarking tool was the standard Yahoo! Cloud Serving Benchmark (YCSB) and all applied configuration options are exposed.

In order to ensure full transparency and also reproducibility of the presented results, all benchmark results are publicly available on GitHub. This data contains the raw performance measurements as well as additional metadata such as DBaaS instance details and VM details for running the YCSB instances. The interested reader will be able to reproduce the results on their own even without the benchANT platform.

MongoDB vs ScyllaDB Benchmark Results Overview

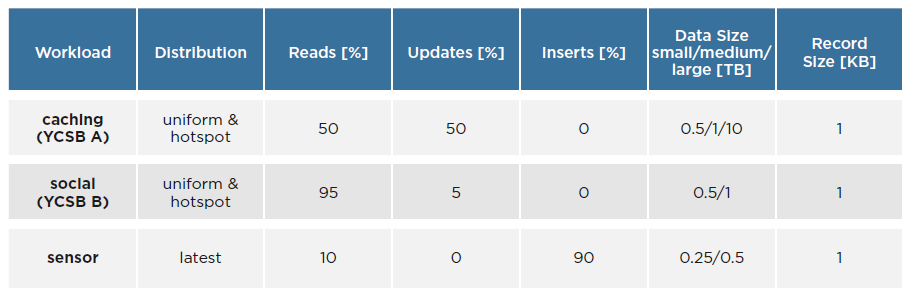

The complete benchmark covers three workloads: social, caching, and sensor.

- The social workload is based on the YCSB Workload B. It creates a read-heavy workload, with 95% read operations and 5% update operations. We use two shapes of this workload, which differ in terms of the request distribution patterns, namely uniform and hotspot distribution. These workloads are executed against the small database scaling size with a data set of 500GB and against the medium scaling size with a data set of 1TB.

- The caching workload is based on the YCSB Workload A. It creates a read-update workload, with 50% read operations and 50% update operations. The workload is executed in two versions, which differ in terms of the request distribution patterns (namely uniform and hotspot distribution). This workload is executed against the small database scaling size with a data set of 500GB, the medium scaling size with a data set of 1TB and a large scaling size with a data set of 10TB.

- The sensor workload is based on the YCSB and its default data model, but with an operation distribution of 90% insert operations and 10% read operations that simulate a real-world IoT application. The workload is executed with the YCSB "latest" request distribution pattern. This workload is executed against the small database scaling size with a data set of 250GB and against the medium scaling size with a data set of 500GB.
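The three operation mixes above can be written down as YCSB-style property sets. The following is a minimal sketch, assuming the standard CoreWorkload property names (`readproportion`, `updateproportion`, `insertproportion`, `requestdistribution`). The `WORKLOADS` table and the `to_properties` helper are illustrative only; the parameter files actually used in the study are in the GitHub repository and may differ.

```python
# Operation mixes as described in the text. Property names follow YCSB's
# CoreWorkload conventions; settings not stated in the article are omitted.
WORKLOADS = {
    "social": {
        "readproportion": 0.95, "updateproportion": 0.05, "insertproportion": 0.0,
        "requestdistribution": ["uniform", "hotspot"],
    },
    "caching": {
        "readproportion": 0.5, "updateproportion": 0.5, "insertproportion": 0.0,
        "requestdistribution": ["uniform", "hotspot"],
    },
    "sensor": {
        "readproportion": 0.1, "updateproportion": 0.0, "insertproportion": 0.9,
        "requestdistribution": ["latest"],
    },
}


def to_properties(name, distribution):
    """Render one workload variant as YCSB-style key=value lines (hypothetical helper)."""
    spec = WORKLOADS[name]
    if distribution not in spec["requestdistribution"]:
        raise ValueError(f"{name} is not run with the {distribution} distribution")
    keys = ("readproportion", "updateproportion", "insertproportion")
    lines = [f"{key}={spec[key]}" for key in keys]
    lines.append(f"requestdistribution={distribution}")
    return "\n".join(lines)


print(to_properties("social", "hotspot"))
```

Keeping the mix and the request distribution separate makes it easy to see that the social and caching workloads share the same two distributions and differ only in the read/update ratio.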

The following summary sections capture key insights as to how MongoDB and ScyllaDB compare across different workloads and database cluster sizes. A more extensive description of results for all workloads and configurations is provided in this report.

Performance Comparison Summary: MongoDB vs ScyllaDB

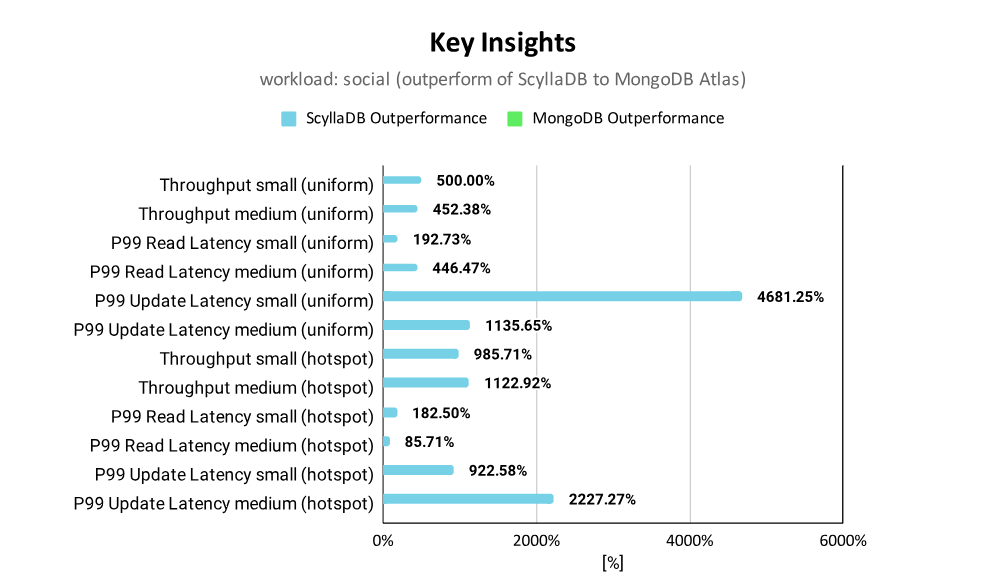

For the social workload, ScyllaDB outperforms MongoDB with higher throughput and lower latency for all measured configurations.

- ScyllaDB provides up to 12 times higher throughput

- ScyllaDB provides significantly lower (down to 47 times) update latencies compared to MongoDB

- ScyllaDB provides lower read latencies, down to 5 times

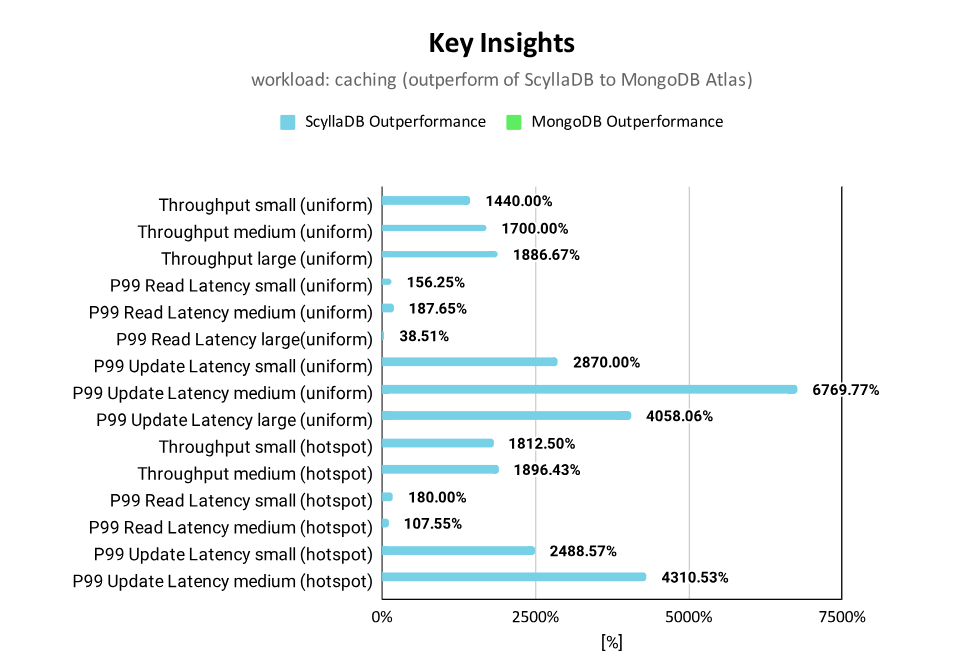

For the caching workload, ScyllaDB outperforms MongoDB with higher throughput and lower latency for all measured configurations.

- Even a small 3-node ScyllaDB cluster performs better than a large 18-node MongoDB cluster

- ScyllaDB provides consistently higher throughput, with the advantage growing with data size to up to 20 times

- ScyllaDB provides significantly better update latencies (down to 68 times) compared to MongoDB

- ScyllaDB read latencies are also lower for all scaling sizes and request distributions, down to 2.8 times.

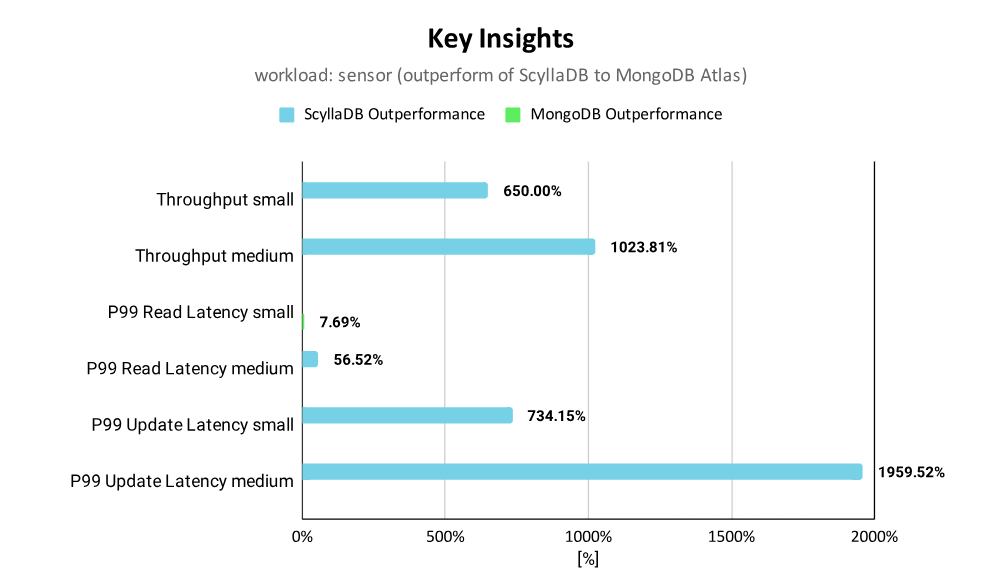

For the sensor workload, ScyllaDB outperforms MongoDB with higher throughput and lower latency, except for the read latency in the small scaling size.

- ScyllaDB provides consistently higher throughput, with the advantage growing with data size to up to 19 times

- ScyllaDB provides lower (down to 20 times) update latency results compared to MongoDB

- MongoDB provides lower read latency for the small scaling size, but ScyllaDB provides lower read latencies for the medium scaling size

Scalability Comparison Summary: MongoDB vs ScyllaDB

Note that the following sections highlight charts for the hotspot distribution; to see results for other distributions, view the complete report.

For the social workload, ScyllaDB achieves near linear scalability by achieving a throughput scalability of 386% (of the theoretically possible 400%). MongoDB achieves a scaling factor of 420% (of the theoretically possible 600%) for the uniform distribution and 342% (of the theoretically possible 600%) for the hotspot distribution.

For the caching workload, ScyllaDB achieves near linear scalability across the tests. MongoDB achieves 350% of the theoretically possible 600% for the hotspot distribution and 900% of the theoretically possible 2400% for the uniform distribution.

For the sensor workload, ScyllaDB achieves near linear scalability with a throughput scalability of 393% of the theoretically possible 400%. MongoDB achieves a throughput scalability factor of 262% out of the theoretically possible 600%.
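To compare these figures on one scale, each achieved scaling factor can be divided by its theoretical maximum. The sketch below uses only the percentages quoted above; the name `scaling_efficiency` and the idea of reporting a single efficiency ratio are illustrative assumptions, not part of the benchANT methodology.

```python
def scaling_efficiency(achieved_pct, theoretical_pct):
    """Fraction of the theoretically possible throughput scaling that was achieved."""
    if theoretical_pct <= 0:
        raise ValueError("theoretical scaling must be positive")
    return achieved_pct / theoretical_pct


# (achieved %, theoretical %) pairs as quoted in the text.
RESULTS = {
    "social / ScyllaDB": (386, 400),
    "social / MongoDB (uniform)": (420, 600),
    "social / MongoDB (hotspot)": (342, 600),
    "caching / MongoDB (hotspot)": (350, 600),
    "caching / MongoDB (uniform)": (900, 2400),
    "sensor / ScyllaDB": (393, 400),
    "sensor / MongoDB": (262, 600),
}

for label, (achieved, theoretical) in RESULTS.items():
    print(f"{label}: {scaling_efficiency(achieved, theoretical):.1%}")
```

Expressed this way, the ScyllaDB results with quoted figures sit above 96% of the theoretical maximum, while the MongoDB results range from roughly 38% to 70%.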

Price-Performance Results Summary: MongoDB vs ScyllaDB

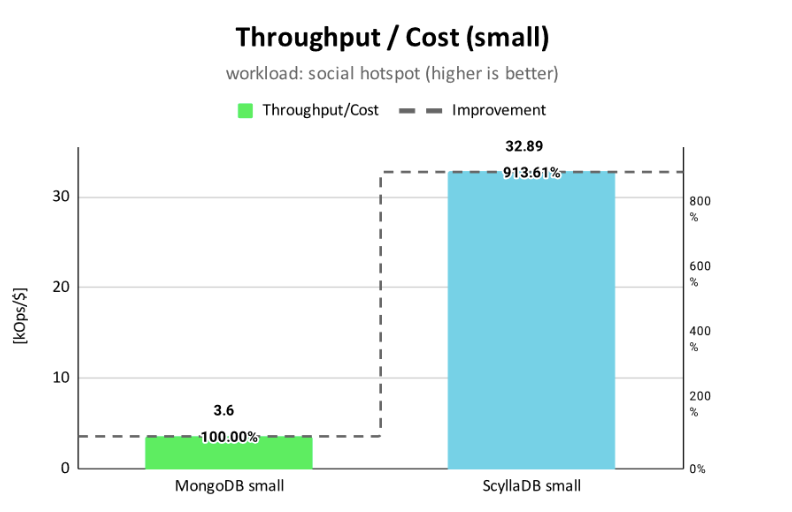

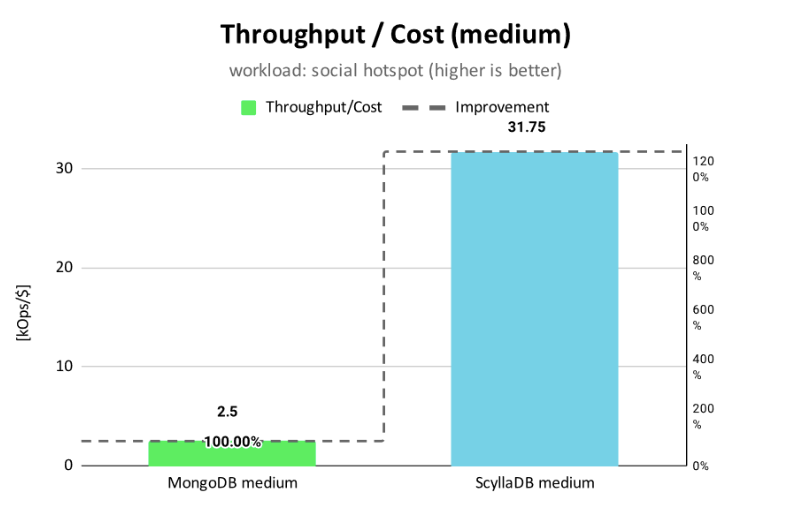

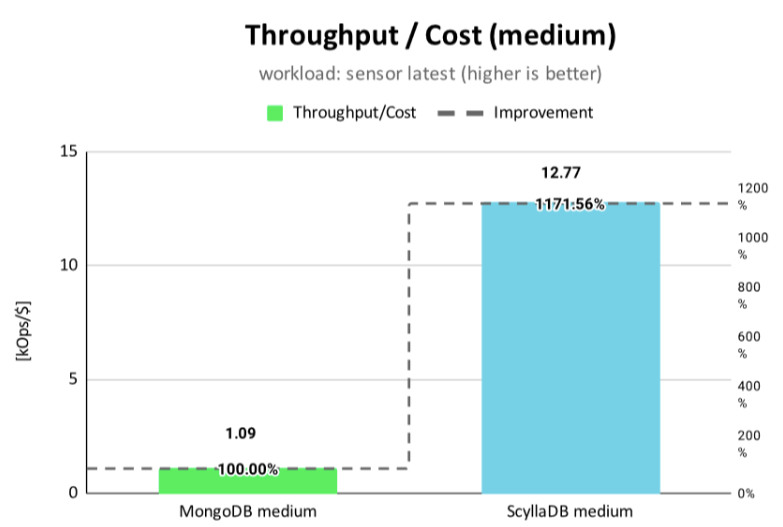

For the social workload, ScyllaDB provides five times more operations/$ compared to MongoDB Atlas for the small scaling size and 5.7 times more operations/$ for the medium scaling size. For the hotspot distribution, ScyllaDB provides 9 times more operations/$ for the small scaling size and 12.7 times more for the medium scaling size.

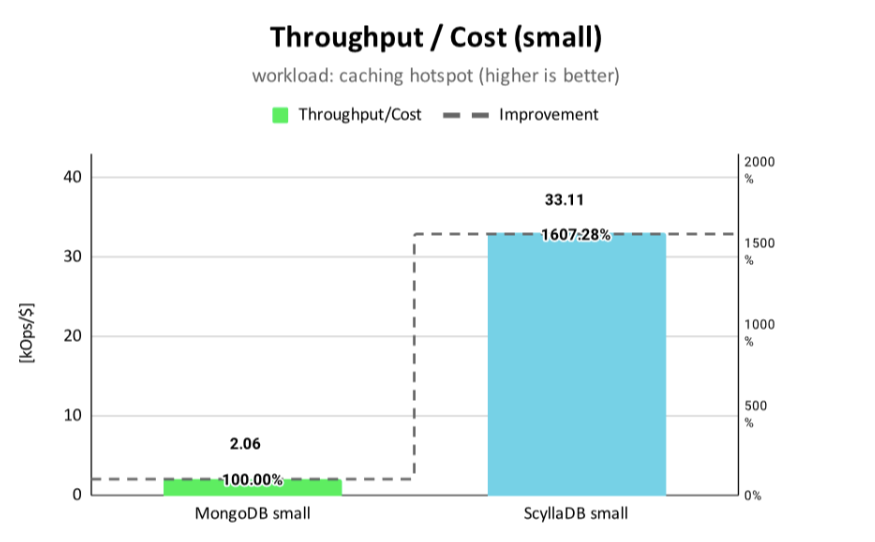

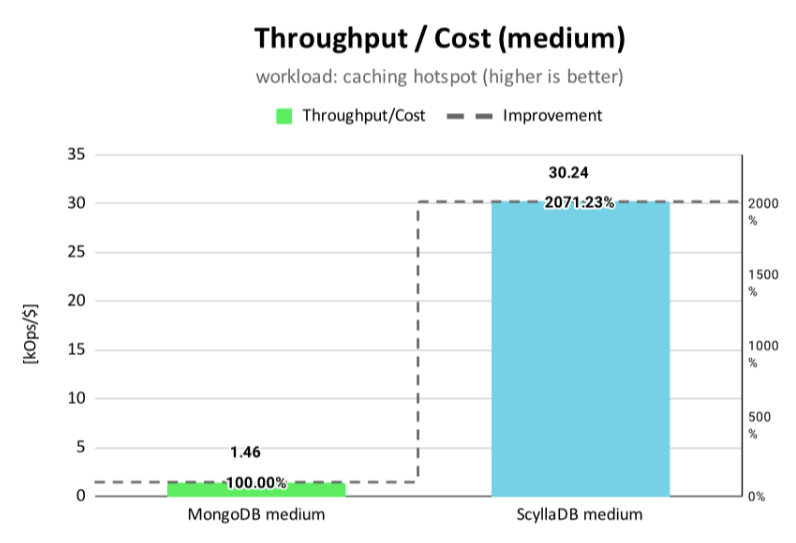

For the caching workload, ScyllaDB provides 12-16 times more operations/$ compared to MongoDB Atlas for the small scaling size and 18-20 times more operations/$ for the scaling sizes medium and large.

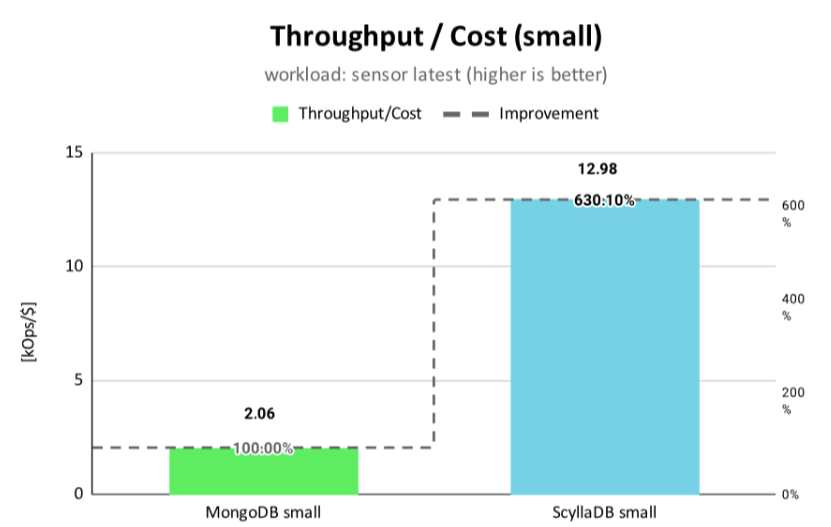

For the sensor workload, ScyllaDB provides 6-11 times more operations/$ compared to MongoDB Atlas. In both the caching and sensor workloads, MongoDB is able to scale the throughput with growing instance/cluster sizes, but the achieved operations/$ decrease.
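The article does not spell out how operations/$ is computed. One plausible reading, sketched below, is the number of operations sustained over a month divided by the monthly cluster price. The formula, the 30-day month, and all input numbers here are assumptions for illustration and are not taken from the benchmark data.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600  # assumption: 30-day billing month


def ops_per_dollar(throughput_ops_s, monthly_cost_usd):
    """Operations delivered per dollar at a sustained throughput (assumed definition)."""
    if monthly_cost_usd <= 0:
        raise ValueError("monthly cost must be positive")
    return throughput_ops_s * SECONDS_PER_MONTH / monthly_cost_usd


# Hypothetical inputs: two clusters at the same monthly price.
cluster_a = ops_per_dollar(throughput_ops_s=100_000, monthly_cost_usd=10_000)
cluster_b = ops_per_dollar(throughput_ops_s=20_000, monthly_cost_usd=10_000)

print(f"cluster A: {cluster_a:,.0f} ops/$")
print(f"cluster B: {cluster_b:,.0f} ops/$")
print(f"advantage: {cluster_a / cluster_b:.1f}x")
```

Under this definition, when two clusters are comparably priced, the operations/$ advantage reduces to the throughput ratio, which is why the price-performance factors track the throughput factors so closely.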

Technical Nuggets (Caching Workload)

12 Hour Run

In addition to the default 30 minute benchmark run, we also select the scaling size large with the uniform distribution for a long-running benchmark of 12 hours.

For MongoDB, we select the determined 8 YCSB instances with 100 threads per YCSB instance and run the caching workload in uniform distribution for 12 hours with a target throughput of 40 kOps/s.

The throughput results show that MongoDB provides the 40 kOps/s constantly over time as expected.

The P99 read latencies over the 12 hours show some peaks in the latencies that reach 20ms and 30ms and an increase of spikes after 4 hours runtime. On average, the P99 read latency for the 12h run is 8.7 ms; for the regular 30 minutes run, it is 5.7 ms.

The P99 update latencies over the 12 hours show a spiky pattern over the entire 12 hours with peak latencies of 400 ms. On average, the P99 update latency for the 12h run is 163.8 ms while for the regular 30 minutes run it is 35.7 ms.

For ScyllaDB, we select the determined 16 YCSB instances with 200 threads per YCSB instance and run the caching workload in uniform distribution for 12 hours with a target throughput of 500 kOps/s.

The throughput results show that ScyllaDB provides the 500 kOps/s constantly over time as expected.

The P99 read latencies over the 12 hours stay constantly below 10 ms except for one peak of 12 ms. On average, the P99 read latency for the 12h run is 7.8 ms.

The P99 update latencies over the 12 hours show a stable pattern over the entire 12 hours with an average P99 latency of 3.9 ms.
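The averages reported for the 12-hour run can be put side by side with a few divisions. The sketch below uses only the numbers quoted above; the dictionary layout and variable names are illustrative. Note that the two databases were run at different target throughputs (40 kOps/s for MongoDB, 500 kOps/s for ScyllaDB), so the cross-database ratios compare latencies at each system's own operating point.

```python
# Average P99 latencies in milliseconds, as quoted in the text.
P99_MS = {
    "MongoDB": {"read_12h": 8.7, "read_30min": 5.7, "update_12h": 163.8, "update_30min": 35.7},
    "ScyllaDB": {"read_12h": 7.8, "update_12h": 3.9},
}

mongo = P99_MS["MongoDB"]
scylla = P99_MS["ScyllaDB"]

# How much MongoDB's average P99 grows from the 30-minute run to the 12-hour run.
mongo_read_growth = mongo["read_12h"] / mongo["read_30min"]
mongo_update_growth = mongo["update_12h"] / mongo["update_30min"]

# MongoDB relative to ScyllaDB over the 12-hour run.
read_gap = mongo["read_12h"] / scylla["read_12h"]
update_gap = mongo["update_12h"] / scylla["update_12h"]

print(f"MongoDB read P99, 12h vs 30min:   {mongo_read_growth:.2f}x")
print(f"MongoDB update P99, 12h vs 30min: {mongo_update_growth:.2f}x")
print(f"Read P99, MongoDB vs ScyllaDB:    {read_gap:.2f}x")
print(f"Update P99, MongoDB vs ScyllaDB:  {update_gap:.1f}x")
```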

Insert Performance

In addition to the three defined workloads, we also measured the plain insert performance for the small scaling size (500 GB), medium scaling size (1 TB) and large scaling size (10 TB) into MongoDB and ScyllaDB. It needs to be emphasized that batch inserts were enabled for MongoDB but not for ScyllaDB (since YCSB does not support it for ScyllaDB).

The following results show that for the small scaling size, the achieved insert throughput is on a comparable level. For the medium scaling size, however, ScyllaDB achieves a 3 times higher insert throughput. For the large-scale benchmark, MongoDB was not able to fully ingest the full 10TB of data due to client side errors, resulting in only 5TB inserted data (for more details, see Throughput Results). Even so, ScyllaDB outperforms MongoDB by a factor of 5.

Client Consistency Performance Impact

In addition to the standard benchmark configurations, we also run the caching workload in the uniform distribution with weaker consistency settings. Namely, we enable MongoDB to read from the secondaries (readPreference=secondaryPreferred) and for ScyllaDB we set readConsistency=ONE.

The results show an expected increase in throughput: for ScyllaDB 23% and for MongoDB 14%. This throughput increase is lower compared to the client consistency impact for the social workload since the caching workload is only a 50% read workload and only the read performance benefits from the applied weaker read consistency settings. It is also possible to further increase the overall throughput by applying weaker write consistency settings.

Technical Nuggets (Social Workload)

Data Model Performance Impact

The default YCSB data model is composed of a primary key and a data item with 10 fields of strings that results in a document with 10 attributes for MongoDB and a table with 10 columns for ScyllaDB. We analyze how performance changes if a pure key-value data model is applied for both databases: a table with only one column for ScyllaDB and a document with only one field for MongoDB.

The results show that for ScyllaDB the throughput improves by 24% while for MongoDB the throughput increase is only 5%.

Client Consistency Performance Impact

All standard benchmarks are run with the MongoDB client consistency writeConcern=majority/readPreference=primary and for ScyllaDB with writeConsistency=QUORUM/readConsistency=QUORUM. Besides these client consistency configurations, we also analyze the performance impact of weaker read consistency settings. For this, we enable MongoDB to read from the secondaries (readPreference=secondaryPreferred) and set readConsistency=ONE for ScyllaDB.

The results show an expected increase in throughput: for ScyllaDB 56% and for MongoDB 49%.
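The two consistency configurations can be summarized as client settings. This is a minimal sketch: the MongoDB side uses the standard connection string options `w` and `readPreference`, while the host name `db.example.invalid`, the database name `ycsb`, and the dictionary keys on the ScyllaDB side (which simply reuse the labels from the text) are placeholders and not the exact YCSB binding parameters used in the study.

```python
from urllib.parse import urlencode


def mongodb_uri(host, write_concern, read_preference):
    """Build a MongoDB connection string carrying the consistency options."""
    query = urlencode({"w": write_concern, "readPreference": read_preference})
    return f"mongodb://{host}/ycsb?{query}"


HOST = "db.example.invalid"  # placeholder host, not a real cluster

# Standard configuration used for all regular benchmark runs.
STANDARD = {
    "mongodb": mongodb_uri(HOST, "majority", "primary"),
    "scylladb": {"writeConsistency": "QUORUM", "readConsistency": "QUORUM"},
}

# Weaker read consistency: writes are unchanged, only reads are relaxed.
WEAKER_READS = {
    "mongodb": mongodb_uri(HOST, "majority", "secondaryPreferred"),
    "scylladb": {"writeConsistency": "QUORUM", "readConsistency": "ONE"},
}

print(STANDARD["mongodb"])
print(WEAKER_READS["mongodb"])
```

Only the read side changes between the two configurations, which is consistent with the observation that read-heavy workloads benefit most from the weaker settings.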

Technical Nuggets (Sensor Workload)

Performance Impact of the Data Model

The default YCSB data model is composed of a primary key and a data item with 10 fields of strings that results in documents with 10 attributes for MongoDB and a table with 10 columns for ScyllaDB. We analyze how performance changes if a pure key-value data model is applied for both databases: a table with only one column for ScyllaDB and a document with only one field for MongoDB keeping the same record size of 1 KB.

Compared to the data model impact for the social workload, the throughput improvements for the sensor workload are clearly lower. ScyllaDB improves the throughput by 8% while for MongoDB there is no throughput improvement. In general, this indicates that using a pure key-value data model improves the performance of read-heavy workloads rather than write-heavy workloads.
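The difference between the two data models is easiest to see on a single record. The sketch below assumes YCSB's default of 10 fields with 100 characters each, which yields the 1 KB record size mentioned above; the function names and the `field0` naming are illustrative.

```python
import random
import string


def random_text(length, rng):
    """Random ASCII payload of the given length."""
    return "".join(rng.choices(string.ascii_letters, k=length))


def default_record(rng, field_count=10, field_length=100):
    """Default YCSB-style record: several string fields (assumed 10 x 100 characters)."""
    return {f"field{i}": random_text(field_length, rng) for i in range(field_count)}


def key_value_record(rng, payload_length=1000):
    """Pure key-value record: one field holding the whole payload."""
    return {"field0": random_text(payload_length, rng)}


def payload_size(record):
    """Total number of payload characters across all fields."""
    return sum(len(value) for value in record.values())


rng = random.Random(42)
wide = default_record(rng)
narrow = key_value_record(rng)

print(len(wide), "fields,", payload_size(wide), "characters")
print(len(narrow), "field,", payload_size(narrow), "characters")
```

Both records carry the same amount of data; only the number of attributes or columns the database has to handle per operation changes.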

Conclusion: Performance, Costs, and Scalability

The complete benchmarking study comprises 133 performance and scalability measurements that compare MongoDB against ScyllaDB. The results show that ScyllaDB outperforms MongoDB for 132 of the 133 measurements.

For all of the applied workloads, namely caching, social and sensor, ScyllaDB provides higher throughput (up to 20 times) and better throughput scalability results compared to MongoDB. Regarding the latency results, ScyllaDB achieves P99 latencies below 10 ms for insert, read and update operations for almost all scenarios. In contrast, MongoDB only achieves P99 latencies below 10 ms for certain read operations, while the insert and update latencies are up to 68 times higher compared to ScyllaDB. These results validate the claim that ScyllaDB’s distributed architecture is able to provide predictable performance at scale (as explained in the benchANT MongoDB vs ScyllaDB technical comparison).

The scalability results show that both database technologies scale horizontally with growing workloads. However, ScyllaDB achieves nearly linear scalability while MongoDB shows a less efficient horizontal scalability. The ScyllaDB results were to a certain degree expected based on its multi-primary distributed architecture while a near linear scalability is still an outstanding result. Also for MongoDB, the less efficient scalability results are expected due to the different distributed architecture (as explained in the benchANT MongoDB vs ScyllaDB technical comparison).

When it comes to price/performance, the results show a clear advantage for ScyllaDB with up to 19 times better price/performance ratio depending on the workload and data set size. In consequence, achieving comparable performance to ScyllaDB would require a significantly larger and more expensive MongoDB Atlas cluster.

In summary, this benchmarking study shows that ScyllaDB provides a great solution for applications that operate on data sets in the terabyte range and that require high throughput (e.g., over 50K OPS) and predictable low latency for read and write operations. This study does not consider the performance impact of advanced data models (e.g., time series or vectors) or complex operation types (e.g., aggregates or scans); these are subject to future benchmark studies. Even so, the current results show that carrying out an in-depth benchmark before selecting a database technology helps to select a database that significantly lowers costs and prevents future performance problems.

See All Details on the benchANT Site

MongoDB vs ScyllaDB in the Real World

Here are some specific examples of how performance compares in the real world:

- TRACTIAN – 10x throughput with 17x lower latency: Tractian needed to upgrade their real-time machine learning environment to support an aggressive increase in data throughput. Benchmarking at 160,000 OPS, they found that ScyllaDB could support a 10X increase in throughput with 17X lower latency than MongoDB – with similar infrastructure costs.

- Augury – From seconds to milliseconds: Augury originally built their predictive services on top of MongoDB, but as the company grew, the dataset reached the limits of MongoDB. They migrated their analytics use cases to ScyllaDB, and also discovered that ScyllaDB could support an OLTP “machine trends dashboard” use case with millisecond response times.

- Numberly – 5x less infrastructure: For Numberly’s real-time ID matching use case, MongoDB’s primary/secondary architecture could not sustain the required write throughput and meet latency SLAs. By replacing a 15-node MongoDB cluster with a 3-node ScyllaDB one, they meet SLAs with less hassle and cost.

Appendix: Raw Benchmark Results

In order to ensure full transparency and also reproducibility of the presented results, all benchmark results are publicly available on GitHub. This data contains the raw performance measurements as well as additional metadata such as DBaaS instance details and VM details for running the YCSB instances.

Appendix: Benchmark Setup, Process, and Limits

DBaaS Setup

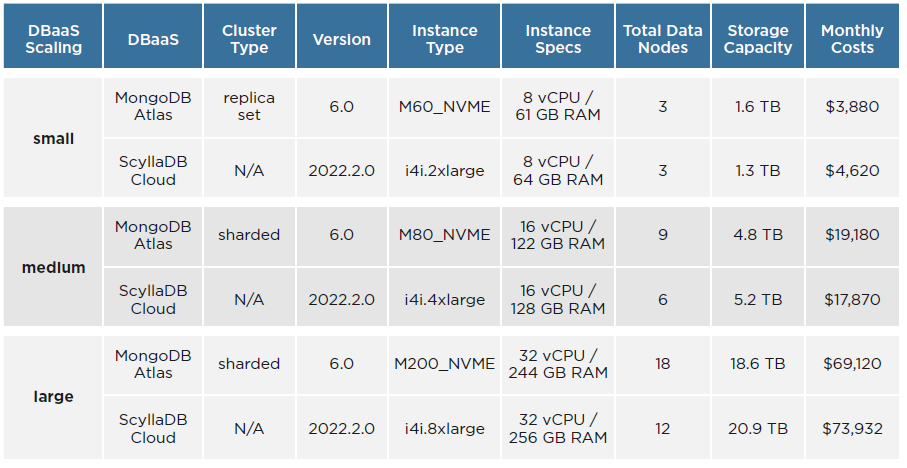

Starting from our technical comparison, MongoDB and ScyllaDB follow different distributed architecture approaches. Consequently, a fair comparison can only be achieved by selecting comparable cluster sizes in terms of costs/month or total compute power. Our comparison selects comparably priced cluster sizes with comparable instance types, having the goal to compare the provided performance (throughput and latency) as well as scalability for three cluster sizes under growing workloads.

The following table describes the selected database cluster sizes to be compared and classified into the scaling sizes small, medium and large. All benchmarks are run on AWS in the us-west region and the prices are based on the us-west-1 (N. California) region, which means that the DBaaS instances and the VMs running the benchmark instances are deployed in the same region. VPC peering is not activated for MongoDB or ScyllaDB. For MongoDB, all benchmarks were run against version 6.0; for ScyllaDB, against 2022.2. The period in which the benchmarks were carried out was March to June 2023.

Workload Setup

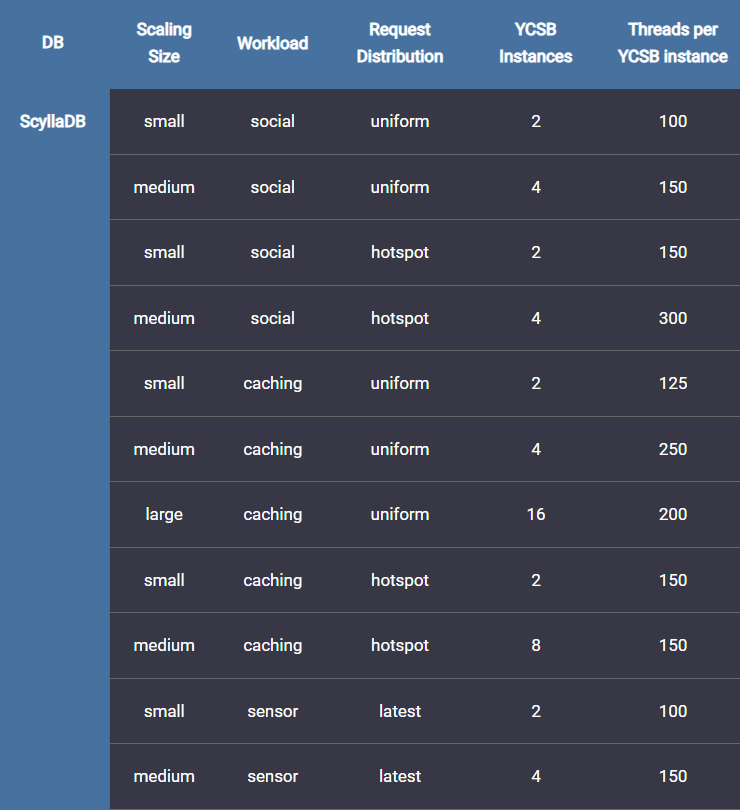

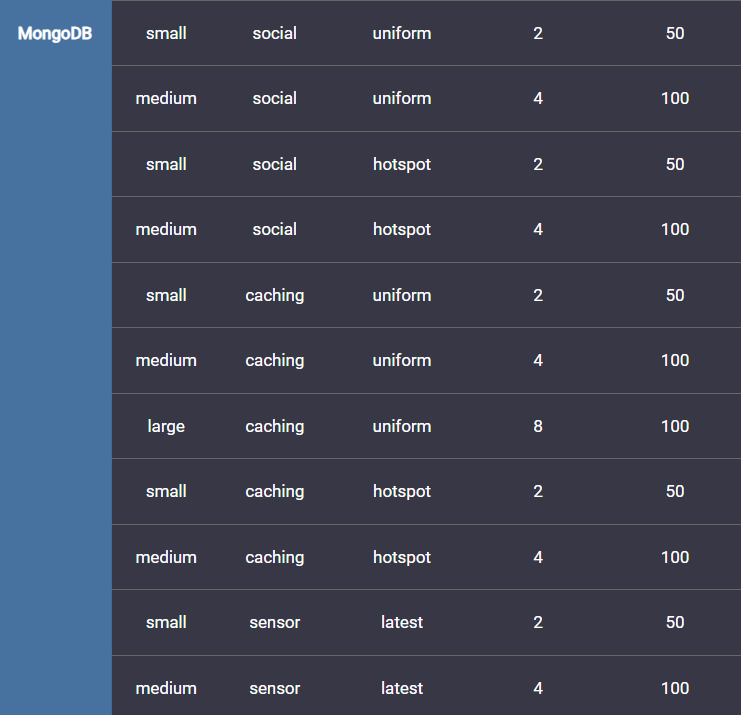

In order to simulate realistic workloads, we use YCSB in the latest available version 0.18.0-SNAPSHOT from the original GitHub repository. Based on YCSB, we define three workloads that map to real world use cases. The key parameters of each workload are shown in the following table, and the full set of applied parameters is available in the GitHub repository.

The caching and social workloads are executed with two different request patterns: The uniform request distribution simulates a close-to-zero cache hit ratio workload, and the hotspot distribution simulates an almost 100% cache hit workload.

All benchmarks are defined to run with a comparable client consistency. For MongoDB, the client consistency writeConcern=majority and readPreference=primary is applied. For ScyllaDB, writeConsistency=QUORUM and readConsistency=QUORUM are used. For more details on the client consistencies, see the technical nuggets sections and our detailed comparison of the two databases. In addition, we have also analyzed the performance impact of weaker consistency settings for the social and caching workloads.

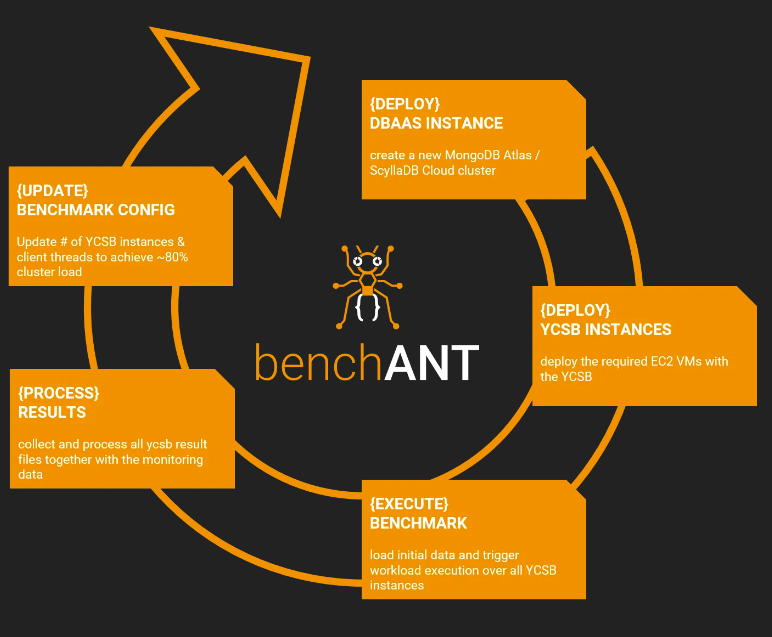

Benchmark Process

In order to achieve a realistic utilization of the benchmarked database, each workload is scaled with the target database cluster (i.e. small, medium and large). For this, the data set size, the number of YCSB instances and the number of client threads are scaled accordingly to achieve 70-80% load with a stable throughput on the target database cluster.

Each benchmark run is carried out by the benchANT platform that handles the deployment of the required number of EC2 c5.4xlarge VMs with 16 vCores and 32GB RAM to run the YCSB instances, deployment of the DBaaS instances and orchestration of the LOAD and RUN phases of YCSB. After loading the data into the DB, the cluster is given a 15-minute stabilization time before starting the RUN phase executing the actual workload. In addition, we configured one workload to run for 12 hours to ensure the benchmark results are also valid for long running workloads.

For additional details on the benchmarking methodology (for example, how we identified the optimal throughput per database and scaling size), see the full report on the benchANT site.

Limitations of the Comparison

This benchmark comparison focuses on performance and scalability in relation to the costs. It is by no means an all-encompassing guide on when to select MongoDB or ScyllaDB. Yet, by combining the insights of the technical comparison with the results of this benchmark article, the reader gets a comprehensive decision base.

YCSB is a great benchmarking tool for relative performance comparisons. However, when it comes to measuring absolute latencies under steady throughput, it is affected by the coordinated omission problem. The latest release of the YCSB introduces an approach to address this problem. Yet, during the benchmark dry runs, it turned out that this feature is not working as expected (unrealistically high latencies were reported).

In the early (and also later) days of cloud computing, the performance of cloud environments was known to be volatile. This required experimenters to repeat experiments several times at different times of the day. Only then were they able to gather statistically meaningful results. Recent studies such as the one by Scheuner and Leitner show that this has changed. AWS provides particularly stable service quality. Due to that, all experiments presented here were executed once.

Appendix: Benchmarking Process

The process of database benchmarking is still a popular and ongoing topic in research and industry. With benchANT’s roots in research, the concepts of transparent and reproducible database benchmarking are applied in this report’s context to ensure a fair comparison.

When benchmarking database systems for their performance (i.e. throughput and latency), there are two fundamental objectives:

- Finding the maximum achievable throughput for different database instances

- Finding the best read and write latency for different database instances

For objective 1, the workload is executed without a throughput limitation and with sufficient workload instances to push the database instance to the maximum available throughput. In this scenario, the latency results are only secondary. Latencies are expected to fluctuate over the benchmark runtime due to the continuously high load on the database system.

For objective 2, the workload is run with a target throughput that ensures stable load on the cluster and the latency results are in primary focus. The challenge in this approach lies in identifying the required number of benchmark instances and client threads per instance to achieve the desired cluster utilization. It is noteworthy that many benchmarking tools are affected by the coordinated omission problem. In a nutshell, affected benchmarking suites might report embellished latencies that are still valid for a relative comparison but might not hold as absolute latencies. The YCSB does not yet resolve the coordinated omission problem (find more details in the YCSB changelog and our reported issue). Consequently, the measured latencies should be taken with a grain of salt when it comes to absolute latencies.
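A small simulation illustrates why coordinated omission embellishes latencies. The sketch models a single client thread that intends to send one request every `interval_ms` but cannot send the next request until the previous one has finished. It is a simplified illustration with made-up service times, not a model of YCSB's internals.

```python
def simulate(service_times_ms, interval_ms):
    """Return (naive, corrected) latencies for a blocking client with a fixed send schedule."""
    naive, corrected = [], []
    clock = 0.0
    for i, service in enumerate(service_times_ms):
        intended_start = i * interval_ms
        # The client is blocked while the previous request is still in flight.
        actual_start = max(clock, intended_start)
        finish = actual_start + service
        naive.append(finish - actual_start)        # measured from the actual send time
        corrected.append(finish - intended_start)  # measured from the intended send time
        clock = finish
    return naive, corrected


# Hypothetical trace: 1 ms responses with a single 100 ms stall in the middle.
service_times = [1.0] * 5 + [100.0] + [1.0] * 5
naive, corrected = simulate(service_times, interval_ms=10.0)

slow_naive = sum(1 for latency in naive if latency > 10.0)
slow_corrected = sum(1 for latency in corrected if latency > 10.0)
print("requests slower than 10 ms (naive):    ", slow_naive)
print("requests slower than 10 ms (corrected):", slow_corrected)
```

The naive measurement records the stall as a single slow request, while the corrected view also counts the requests that were delayed behind it. Both measurements rank two databases the same way under the same load, which is why the relative comparison stays valid even when absolute percentiles are too optimistic.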

In this comparison, we follow objective 2. Since the benchANT framework fully automates the benchmark process, it is very easy to run multiple benchmarks with varying workload intensities to identify the optimal throughput of each database, i.e. a constant throughput rate that still provides stable latencies. For MongoDB and ScyllaDB, this results in ~80-85% CPU cluster utilization.

The following figure illustrates on a high level the technical benchmarking tasks that are automated by the benchANT platform.

- The DEPLOY DBaaS INSTANCE step creates a new ScyllaDB/MongoDB Atlas instance based on the provided deployment parameters. In addition, this step also enables sharding on the YCSB collection level for MongoDB and creates the table YCSB for ScyllaDB.

- The DEPLOY YCSB INSTANCE step deploys the specified number of YCSB instances on AWS EC2 with a mapping of one YCSB instance to one VM.

- The EXECUTE BENCHMARK step orchestrates the YCSB instances to load the database instances with the initial data set, ensuring at least 15 minutes of stabilization time before executing the actual workload (caching/social/sensor).

- The PROCESS RESULTS step collects all YCSB benchmark results from the YCSB instances and processes them into one data frame. In addition, monitoring data from MongoDB Atlas is automatically collected and processed to analyze the cluster utilization in relation to the applied workload. For ScyllaDB, it is currently not possible to extract the monitoring data, so we manually inspect the monitoring dashboard on ScyllaDB Cloud. In general, both DBaaS come with powerful monitoring dashboards, as shown in the following example screenshots. The goal of the analysis is to determine a workload intensity for MongoDB and ScyllaDB that results in ~85% database utilization.

- In the UPDATE BENCHMARK CONFIG step, the results of the previous analysis step are taken into account and the number of YCSB instances and client threads per instance is aligned to approach the target cluster utilization.

This process is carried out for each benchmark configuration and database until the target cluster utilization is achieved. In total, we run 133 benchmarks for MongoDB Atlas and ScyllaDB to determine the optimal constant throughput for each cluster size and each workload. The full data set of the results presented in this report is available on GitHub.
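The iterative part of this process (execute, analyze, update the configuration, repeat) can be sketched as a simple feedback loop. Everything below is a hypothetical illustration: `tune_threads`, the fixed step size, and the `fake_cluster` stand-in are not part of the benchANT platform, and the real process adjusts both the number of YCSB instances and the threads per instance rather than a single knob.

```python
def tune_threads(measure_utilization, threads=50, target=(0.80, 0.85), step=10, max_rounds=20):
    """Adjust the client thread count until cluster utilization falls inside the target band."""
    low, high = target
    for _ in range(max_rounds):
        utilization = measure_utilization(threads)  # one full benchmark run per round
        if utilization < low:
            threads += step                         # cluster underused: add load
        elif utilization > high:
            threads = max(step, threads - step)     # cluster overloaded: reduce load
        else:
            return threads, utilization
    raise RuntimeError("no configuration reached the target utilization")


def fake_cluster(threads):
    """Stand-in for a benchmark run: utilization grows linearly and saturates at 100%."""
    return min(1.0, threads / 200)


threads, utilization = tune_threads(fake_cluster)
print(f"{threads} threads -> {utilization:.0%} utilization")
```

In practice each call to `measure_utilization` corresponds to a complete load, stabilization and run cycle, so the number of rounds directly drives the cost of finding the operating point.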

Appendix – Determined Workload Intensities

Based on the outlined benchmarking process, the following workload intensities for the target database scaling size have been determined in order to achieve ~80-90% CPU utilization across the cluster:

Appendix – YCSB Extension

During the benchmarking project, we encountered two technical problems with the applied YCSB 0.18.0-SNAPSHOT version that are described in the following.

Coordinated Omission Feature

As described above, YCSB is affected by the coordinated omission problem, and the feature intended to address it did not work as expected in this benchmarking project. There is not yet a proposed solution to the issue we created on the YCSB GitHub repository.

We analyzed this problem by running benchmarks with the parameter measurement.interval=both not only for MongoDB and ScyllaDB but also for other databases such as PostgreSQL and Couchbase; these runs also resulted in unrealistically high latencies of >100s.

Due to time and resource constraints, we have not debugged the current YCSB code for further details.

Support for Data Sets of >2.1 TB

While running the scaling size large benchmarks with a target data set size of 10 TB, it turned out that the YCSB is currently limited to data sets of 2.1 TB due to the usage of the data type int for the recordCount attribute in several places in the code. This issue is also reported in a YCSB issue.
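The 2.1 TB figure follows from the range of a 32-bit signed integer. The short calculation below assumes 1 KB records counted as 1,000 bytes each, matching the record size mentioned for the data model experiments; the variable names are illustrative.

```python
INT_MAX = 2**31 - 1          # largest value of a 32-bit signed int
RECORD_SIZE_BYTES = 1_000    # assumption: 1 KB records, counted as 1,000 bytes

# Largest data set addressable when the record count is stored in an int.
max_data_set_tb = INT_MAX * RECORD_SIZE_BYTES / 1e12

# Number of records needed for the 10 TB data set of the large scaling size.
records_for_10_tb = 10 * 10**12 // RECORD_SIZE_BYTES
fits_in_int = records_for_10_tb <= INT_MAX

print(f"max data set with int record count: {max_data_set_tb:.2f} TB")
print(f"records needed for 10 TB: {records_for_10_tb:,}")
print(f"fits in a 32-bit int: {fits_in_int}")
```

A 10 TB data set needs about 10 billion records, well beyond the roughly 2.1 billion an int can count, so a wider integer type is required for the record count.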

We have resolved this problem in the benchANT YCSB fork that has been applied for the ScyllaDB caching large benchmarks. The changes will be contributed back to the original YCSB repository.