WebAssembly: Putting Code and Data Where They Belong

Brian Sletten and Piotr Sarna chat about Wasm + data trends at ScyllaDB Summit

ScyllaDB Summit isn’t just about ScyllaDB. It’s also a space for learning about the latest trends impacting the broader data and database world. Case in point: the WebAssembly discussions at ScyllaDB Summit 23.

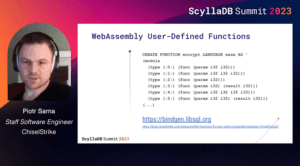

Last year, the conference featured two talks that addressed Wasm from two distinctly different perspectives: one by Brian Sletten (WebAssembly: The Definitive Guide author and President at Bosatsu Consulting) and one by Piotr Sarna (maintainer of libSQL, which supports Wasm user-defined functions and compiles to WebAssembly).

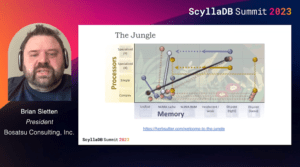

Brian’s Everything in its Place: Putting Code and Data Where They Belong talk shared how Wasm challenges the fundamental assumption that code runs on computers and data is stored in databases. And Piotr’s libSQL talk introduced, well, libSQL (Turso’s fork of SQLite that’s modernized for edge computing and distributed systems) and what’s behind its Wasm-powered support for dynamic function creation and execution.

As the event unfolded, Brian and Piotr met up for a casual Wasm chat. Here are some highlights from their discussion…

How people are using WebAssembly to create a much closer binding between the data and the application

Brian: From mainframe, to client-server, to extending servers with things like servlets, Perl scripts, and cgi-bin, I think we’ve just really been going back and forth, and back and forth, back and forth. The reality is that different problems require different topologies. We’re gaining more topologies to support, but also more tools to help us support them.

The idea that we can co-locate large amounts of data for long-term training sessions in the cloud avoids having to push a lot of that back and forth. But the more we want to support things like offline applications and interactions, the more we’re going to need to be able to capture data on-device and on-browser. So, the idea of being able to push app databases into the browser, like DuckDB and SQLite, and Postgres, and – I fully expect someday – ScyllaDB as well, allows us to say, “Let’s run the application, capture whatever interactions with the user we want locally, then sync it incrementally or in an offline capacity.”

We’re getting more and more options for where we co-locate these things, and it’s going to be driven by the business requirements as much as technical requirements for certain kinds of interactions. And things like regulatory compliance are a big part of that as well. For example, people might want to schedule work in Europe – or outside of Europe – for various regulatory reasons. So, there are lots of things happening that are driving the need, and technologies like WebAssembly and LLVM are helping us address that.

How Turso’s libSQL lets users get their compute closer to their data

Piotr: libSQL is actually just an embedded database library. By itself, it’s not a distributed system – but, it’s a very nice building block for one. You can use it at the edge. That’s a very broad term; to be clear, we don’t mean running software on your fridges or light bulbs. It’s just local data centers that are closer to the users. And we can build a distributed database by having lots of small instances running libSQL and then replicating to it. CRDTs – basically, this offline-first approach where users can interact with something and then sync later – is also a very interesting area. Turso is exploring that direction. And there’s actually another project called cr-sqlite that applies CRDTs to SQLite. It’s close to the approach we’d like to take with libSQL. We want to have such capabilities natively so users can write these kinds of offline-first applications and the system knows how to resolve conflicts and apply changes later.
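
To make the offline-first idea concrete, here is a minimal sketch of one of the simplest CRDTs, a last-writer-wins map. It is an illustration only: the `LWWMap` class, its fields, and the tie-breaking rule are assumptions made for this example, not the design of libSQL or cr-sqlite. Each replica accepts writes locally, and `merge` resolves conflicts deterministically so that replicas converge no matter the order in which they sync.

```python
from dataclasses import dataclass, field


@dataclass
class LWWMap:
    """Hypothetical last-writer-wins map; a teaching sketch, not a real library API."""
    replica_id: str
    clock: int = 0  # logical counter, not wall-clock time
    entries: dict = field(default_factory=dict)  # key -> (clock, replica_id, value)

    def set(self, key, value):
        # Local write: works offline, no coordination with other replicas.
        self.clock += 1
        self.entries[key] = (self.clock, self.replica_id, value)

    def get(self, key):
        entry = self.entries.get(key)
        return None if entry is None else entry[2]

    def merge(self, other):
        # Keep the entry with the larger (clock, replica_id) pair for each key.
        # The replica id only breaks ties between equal clocks.
        for key, theirs in other.entries.items():
            mine = self.entries.get(key)
            if mine is None or theirs[:2] > mine[:2]:
                self.entries[key] = theirs
        self.clock = max(self.clock, other.clock)


# Two devices edit independently while offline...
phone = LWWMap("phone")
laptop = LWWMap("laptop")
phone.set("title", "Draft")
phone.set("author", "Ada")
laptop.set("title", "Draft v2")
laptop.set("title", "Final")

# ...then sync in either order and end up with the same state.
phone.merge(laptop)
laptop.merge(phone)
```

Last-writer-wins silently discards the losing write, which is acceptable for some fields and not for others; richer CRDTs (counters, sets, sequences) exist for cases where both edits must survive.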

Moving from “Web Scale” big data to a lot of small data

Brian: I think this shift represents a more realistic view. Our definition of scale is “web scale.” Nobody would think about trying to put the web into a container. Instead, you put things into the web. The idea that all of our data has to go into one container is something that clearly has an upper limit. That limit is getting bigger over time, but it will never be “all of our data.”

Protocols and data models that interlink loosely federated collections of data (on-demand, as needed, using standard query mechanisms) will allow us to keep the smaller amounts of data on-device, and then connect it back up. You’ve learned additional things locally that may be of interest when aggregated and connected. That could be anything from offline syncing to real-time linked data kinds of interactions.

Really, this idea of trying to “own” entire datasets is essentially an outdated mentality. We have to get it to where it needs to go, and obviously have the optimizations and engineering constraints around the analytics that we need to ask. But the reality is that data is produced in lots of different places. And having a way to work with different scenarios of where that data lives and how we connect it is all part of that story.

I’ve been a big advocate for linked data, knowledge graphs, and things like that for quite some time. That’s where I think things like WebAssembly, linked data, architectural distribution, serverless functions, cloud computing, and edge computing are all sort of coalescing on this fluid fabric view of data and computation.

Trends: From JavaScript to Rust, WebAssembly, and LLVM

Brian: JavaScript is a technology that began in the browser, but was increasingly pushed to the back end. For that to work, you either need a kind of flat computational environment where everything is the same, or something where we can virtualize the computation in the form of Java bytecode, or .NET CIL, or whatever. JavaScript is part of that because we try to have a JavaScript engine that runs in all these different environments. You get better code reuse, and you get more portability in terms of where the code runs. But JavaScript itself also has some limitations in terms of the kinds of low-level stuff that it can handle (for example, there’s no real opportunity for ahead-of-time optimizations).

Rust represents a material advancement in languages for system engineering and application development. That then helps improve the performance and safety of our code. Moreover, because Rust is built on the LLVM infrastructure, LLVM’s pluggable back-end capability allows it to emit native code, WebAssembly, and even WASI-flavored WebAssembly. This ensures that it runs within an environment providing the capabilities required to do what it needs…and nothing else.

That’s why I think the intersection of architecture, data models, and computational substrates – and I consider both WebAssembly and LLVM important parts of those – is a big part of solving this problem. I mean, the reason Apple was able to migrate from x86 to ARM relatively quickly is because they changed their tooling to be LLVM-based. At that point, it becomes a question of recompiling to some extent.

What’s needed to make small localized databases on the edge + big backend databases a successful design pattern

Piotr: These smaller local databases that are on this magical edge need some kind of source of truth that keeps everything consistent. Especially if ScyllaDB goes big on stronger consistency levels (with Raft), I do imagine a design where you can have these small local databases (say, libSQL instances) that are able to store user data, and users interact with them because they’re close by. That brings really low latency. Then, these small local databases could be synced to a larger database that becomes the single source of truth for all the data, allowing users to access this global state as well.
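
The pattern described above can be sketched roughly as follows. This is a toy under stated assumptions: plain in-memory SQLite (via Python's standard `sqlite3` module) stands in for both the small edge database and the large central one, and the `events` table, the `synced` flag, and the helper names are all hypothetical. A real deployment would replicate over a network and would need a strategy for conflicting writes.

```python
import sqlite3


def open_db():
    # In-memory SQLite stands in for an edge instance or the central store.
    db = sqlite3.connect(":memory:")
    db.execute(
        "CREATE TABLE events ("
        "id TEXT PRIMARY KEY, payload TEXT, synced INTEGER DEFAULT 0)"
    )
    return db


def record_locally(local, event_id, payload):
    # Low-latency write against the nearby database; no round trip to the center.
    local.execute(
        "INSERT INTO events (id, payload) VALUES (?, ?)", (event_id, payload)
    )
    local.commit()


def sync(local, central):
    # Push only rows that have not been synced yet (incremental sync).
    rows = local.execute(
        "SELECT id, payload FROM events WHERE synced = 0"
    ).fetchall()
    # INSERT OR IGNORE keeps a retried sync from duplicating rows.
    central.executemany(
        "INSERT OR IGNORE INTO events (id, payload, synced) VALUES (?, ?, 1)",
        rows,
    )
    central.commit()
    local.executemany(
        "UPDATE events SET synced = 1 WHERE id = ?", [(row[0],) for row in rows]
    )
    local.commit()
    return len(rows)


edge = open_db()
central = open_db()
record_locally(edge, "e1", "click")
record_locally(edge, "e2", "scroll")
first_push = sync(edge, central)   # pushes both pending events
second_push = sync(edge, central)  # nothing new to push
```

Marking rows as synced only after the central commit means a crash between the two steps leads to a harmless re-send rather than lost data.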

Watch the Complete WebAssembly Chat

You can watch the complete Brian <> Piotr chat here: