ScyllaDB on the New AWS EC2 I4i Instances: Twice the Throughput & Lower Latency

As you might have heard, AWS recently released a new EC2 instance type well suited to data-intensive, I/O-heavy workloads such as ScyllaDB: the Intel-based I4i. According to the AWS I4i description, “Amazon EC2 I4i instances are powered by 3rd generation Intel Xeon Scalable processors and feature up to 30 TB of local AWS Nitro SSD storage. Nitro SSDs are NVMe-based and custom-designed by AWS to provide high I/O performance, low latency, minimal latency variability, and security with always-on encryption.”

Now that the I4i series is officially available, we can share benchmark results that demonstrate the impressive performance we achieved on them with ScyllaDB (a high-performance NoSQL database that can tap the full power of high-performance cloud computing instances).

We observed up to 2.7x higher throughput per vCPU on the new I4i series compared to I3 instances for read workloads. With an even mix of reads and writes, we observed 2.2x higher throughput per vCPU on the I4i series, along with a 40% reduction in average latency compared to I3 instances.

We are quite excited about the incredible performance and value that these new instances will enable for our customers going forward.

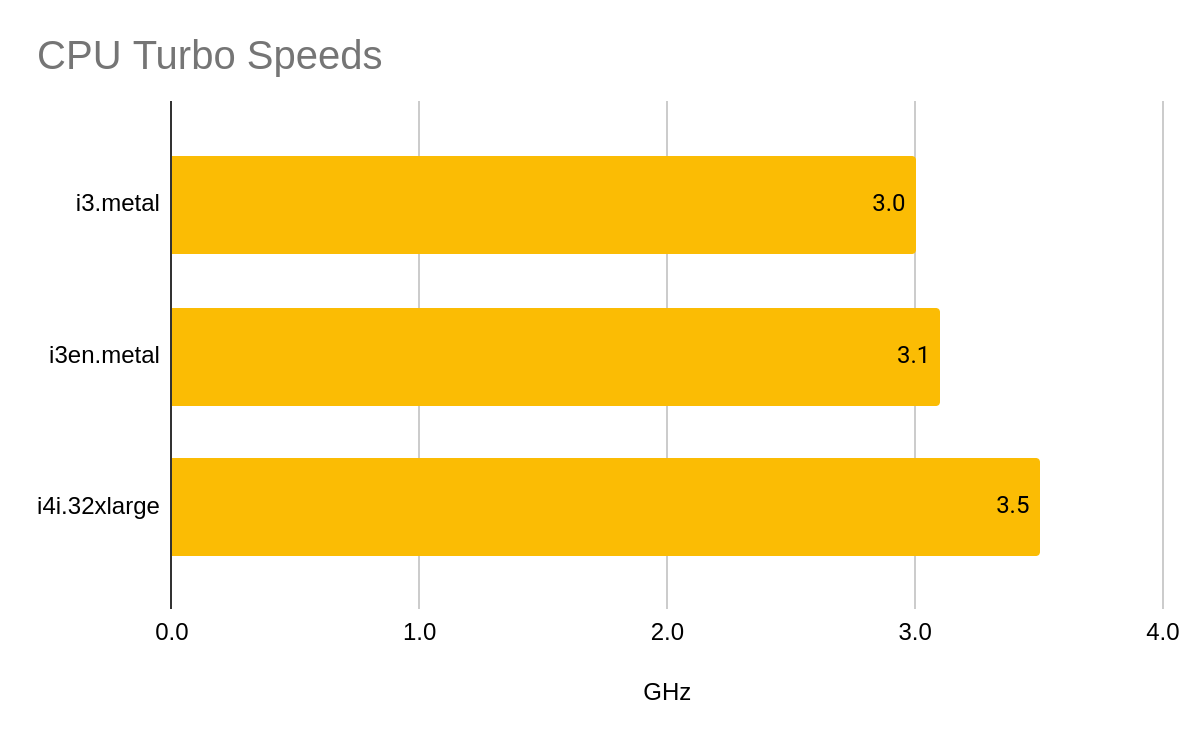

How the I4i Compares: CPU and Memory

For some background, the new I4i instances, powered by “Ice Lake” processors, have a higher CPU frequency (3.5 GHz) vs. the I3 (3.0 GHz) and I3en (3.1 GHz) series.

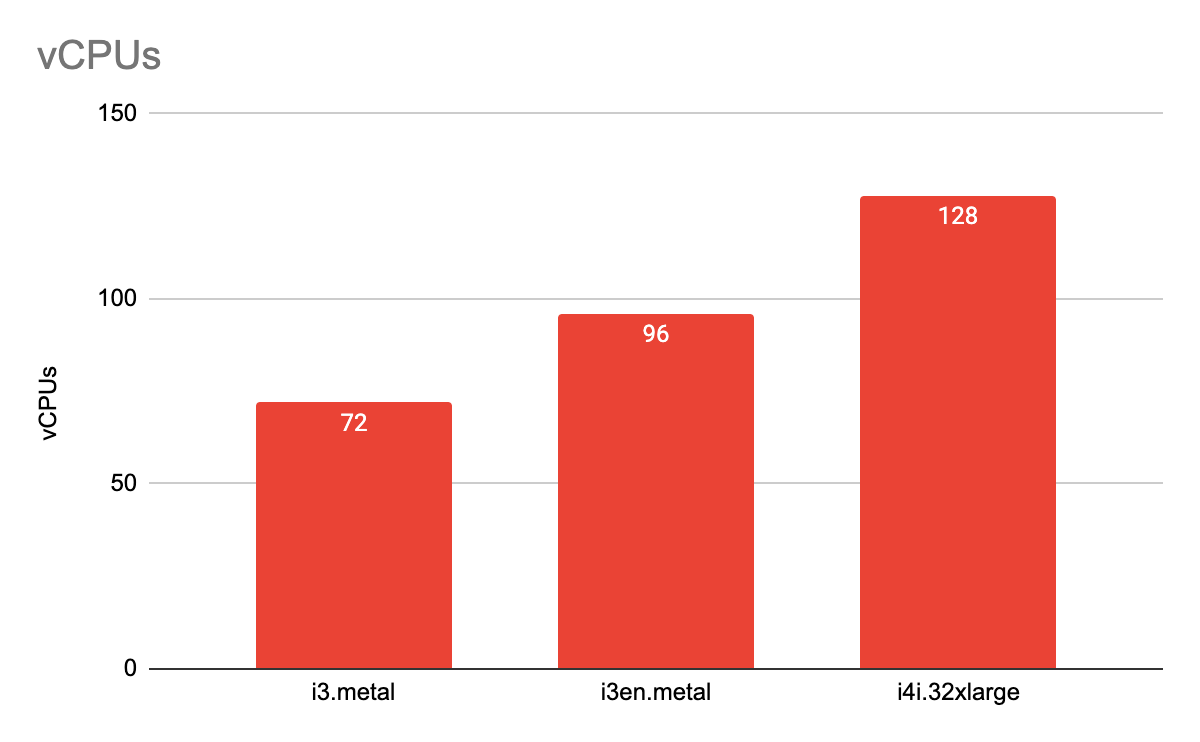

Moreover, the i4i.32xlarge is a monster in terms of processing power, with 128 vCPUs. That’s 33% more than the i3en.metal (96 vCPUs) and about 78% more than the i3.metal (72 vCPUs).
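The percentages above follow from simple ratios. The sketch below recomputes them, assuming AWS's published vCPU counts of 96 for the i3en.metal and 72 for the i3.metal (only the i4i.32xlarge's 128 vCPUs is stated in this post). The `VCPUS` table and the `percent_more` helper are illustrative names, not part of any tool.

```python
# vCPU counts per instance. The i4i.32xlarge figure (128) is from the post;
# the i3en.metal (96) and i3.metal (72) counts are assumed from AWS's published specs.
VCPUS = {"i4i.32xlarge": 128, "i3en.metal": 96, "i3.metal": 72}


def percent_more(a: int, b: int) -> float:
    """Return how many percent larger a is than b."""
    return (a / b - 1) * 100


vs_i3en = percent_more(VCPUS["i4i.32xlarge"], VCPUS["i3en.metal"])
vs_i3 = percent_more(VCPUS["i4i.32xlarge"], VCPUS["i3.metal"])

print(f"vs i3en.metal: {vs_i3en:.1f}% more vCPUs")  # 33.3% more vCPUs
print(f"vs i3.metal:   {vs_i3:.1f}% more vCPUs")    # 77.8% more vCPUs
```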

We expected ScyllaDB to sustain a high number of transactions on these huge machines, and set out to test just how fast the new I4i is in practice. ScyllaDB really shines on machines with many CPUs because it scales linearly with the number of cores, thanks to our unique shard-per-core architecture. Most other applications cannot take full advantage of such a large number of cores. As a result, the performance of other databases may stay flat, or even drop, as the number of cores increases.
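To make the shard-per-core idea more concrete, here is a deliberately simplified sketch, not ScyllaDB's actual code: each partition key is hashed to exactly one shard (one core), so a given piece of data is only ever handled by its owning core and cores do not contend over it. The modulo-of-a-hash mapping and the `shard_for_key` / `keys_per_shard` names are hypothetical; ScyllaDB's real token-to-shard mapping differs.

```python
import hashlib
from collections import Counter


def shard_for_key(key: str, num_shards: int) -> int:
    """Map a partition key to the single shard (core) that owns it.

    Hypothetical illustration: hash the key and take it modulo the shard
    count. It only demonstrates that every key has exactly one owner.
    """
    digest = hashlib.blake2b(key.encode(), digest_size=8).digest()
    return int.from_bytes(digest, "big") % num_shards


def keys_per_shard(keys, num_shards: int) -> Counter:
    """Count how many keys each shard would own."""
    return Counter(shard_for_key(k, num_shards) for k in keys)


keys = [f"user:{i}" for i in range(100_000)]
for cores in (64, 128):
    load = keys_per_shard(keys, cores)
    print(f"{cores} shards: busiest shard owns {max(load.values())} keys")
```

Doubling the shard count roughly halves the number of keys each core owns, which is the intuition behind near-linear scaling on very large machines. The sketch ignores other potential bottlenecks such as disk and network.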

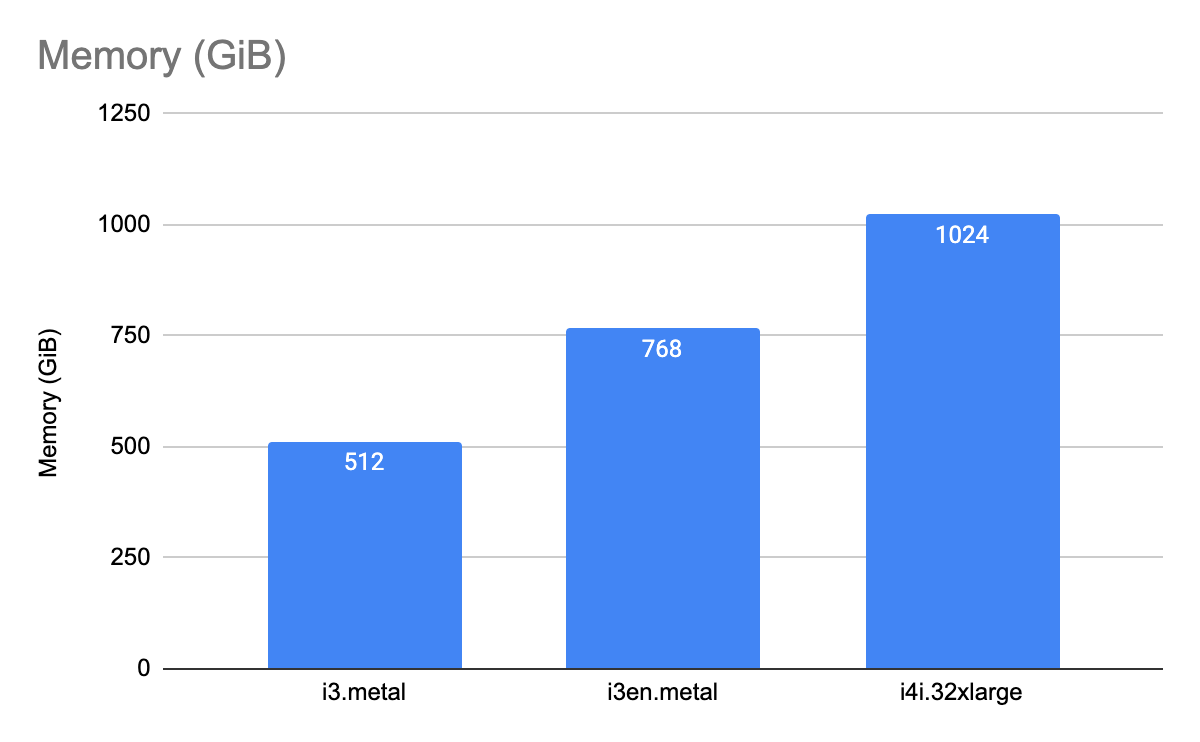

In addition to more CPUs, these new instances are also equipped with more RAM: the i4i.32xlarge has a third more than the i3en.metal, and twice as much as the i3.metal.
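As a quick check of those ratios, the snippet below assumes AWS's published memory sizes of 1,024 GiB for the i4i.32xlarge, 768 GiB for the i3en.metal, and 512 GiB for the i3.metal. The post itself only states the relative figures, and `RAM_GIB` is an illustrative name.

```python
# Memory per instance in GiB, assumed from AWS's published specs
# (the post only states the ratios between them).
RAM_GIB = {"i4i.32xlarge": 1024, "i3en.metal": 768, "i3.metal": 512}

ratio_i3en = RAM_GIB["i4i.32xlarge"] / RAM_GIB["i3en.metal"]  # a third more
ratio_i3 = RAM_GIB["i4i.32xlarge"] / RAM_GIB["i3.metal"]      # twice as much

print(f"{ratio_i3en:.2f}x the i3en.metal, {ratio_i3:.2f}x the i3.metal")  # 1.33x the i3en.metal, 2.00x the i3.metal
```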

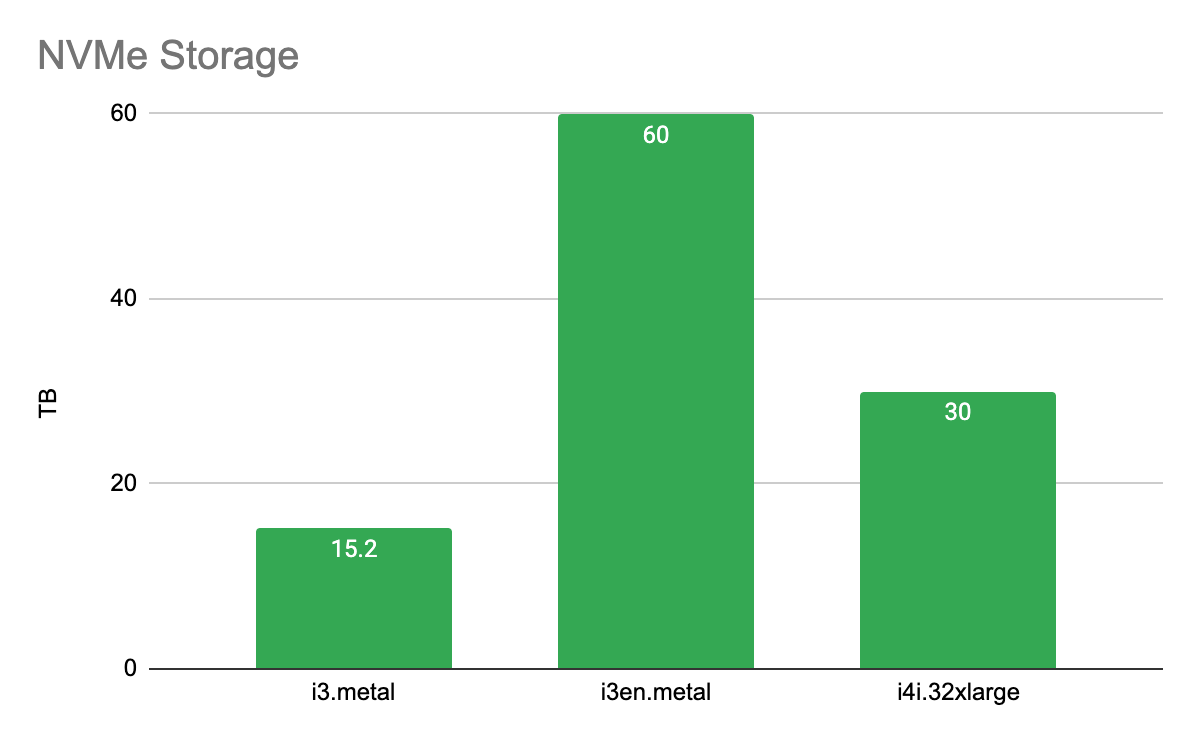

The storage density of the i4i.32xlarge (TB of storage per GB of RAM) is about the same as that of the i3.metal, while the i3en.metal's is considerably higher, as expected for an instance family built for dense storage. In total storage, the i3.metal maxes out at 15.2 TB and the i3en.metal can store a whopping 60 TB, while the i4i.32xlarge sits between the two at 30 TB: twice the i3.metal, and half the i3en.metal. So if storage density per server is paramount to you, the I3en series still has a role to play. Otherwise, in terms of CPU count, clock speed, memory, and overall raw performance, the I4i excels. Now let’s get into the details.
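The density comparison can be reproduced from the storage totals above plus assumed memory sizes (1,024, 768, and 512 GiB, taken from AWS's published specs rather than from this post). `storage_density` is an illustrative helper that divides TB of local storage by GiB of RAM.

```python
# (total local storage in TB, RAM in GiB). Storage totals are from the post;
# RAM sizes are assumed from AWS's published specs.
SPECS = {
    "i3.metal": (15.2, 512),
    "i4i.32xlarge": (30.0, 1024),
    "i3en.metal": (60.0, 768),
}


def storage_density(tb: float, ram_gib: float) -> float:
    """TB of local storage per GiB of RAM."""
    return tb / ram_gib


for name, (tb, ram) in SPECS.items():
    print(f"{name:13} {storage_density(tb, ram):.4f} TB/GiB")
# i3.metal      0.0297 TB/GiB
# i4i.32xlarge  0.0293 TB/GiB
# i3en.metal    0.0781 TB/GiB
```

The i3.metal and i4i.32xlarge land within a few percent of each other, while the i3en.metal offers more than twice the storage per unit of memory.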

EC2 I4i Benchmark Results

The performance of the new I4i instances is truly impressive. AWS worked hard to improve storage performance using the new Nitro SSDs, and that work clearly paid off. Here’s how the I4i’s performance stacked up against the I3’s.

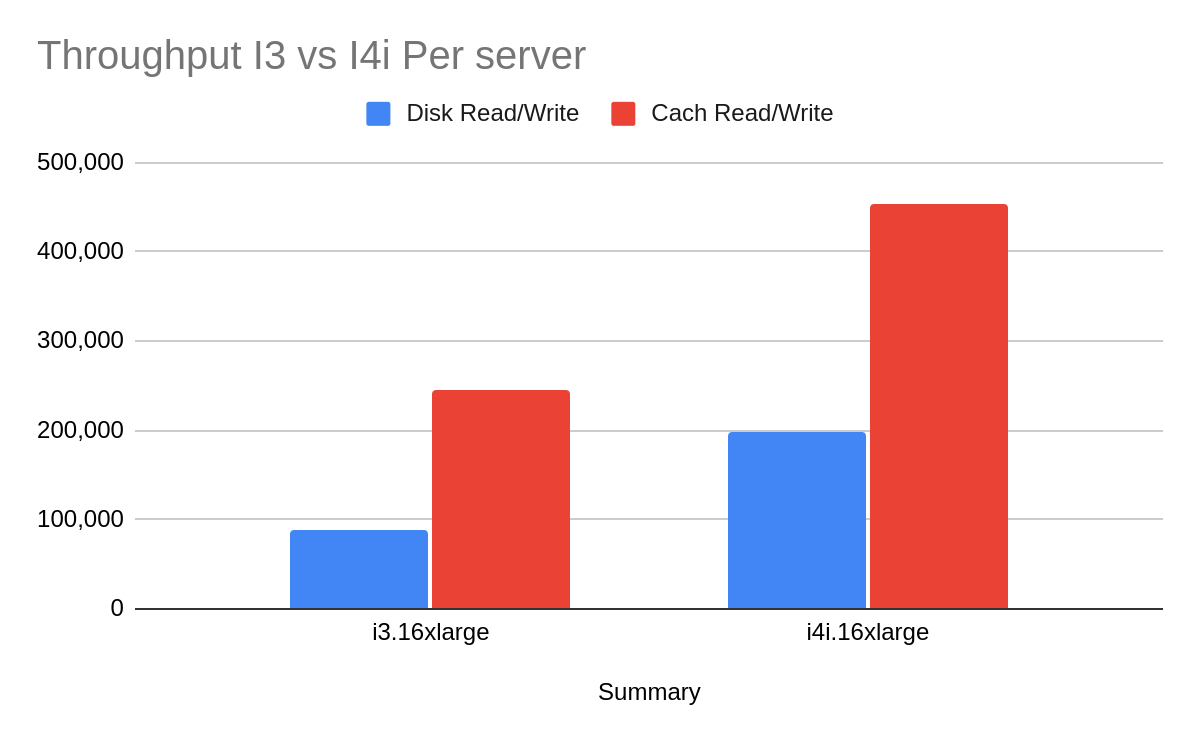

Operations per Second (OPS) throughput results on i4i.16xlarge (64 vCPU servers) vs i3.16xlarge with 50% Reads / 50% Writes (higher is better)

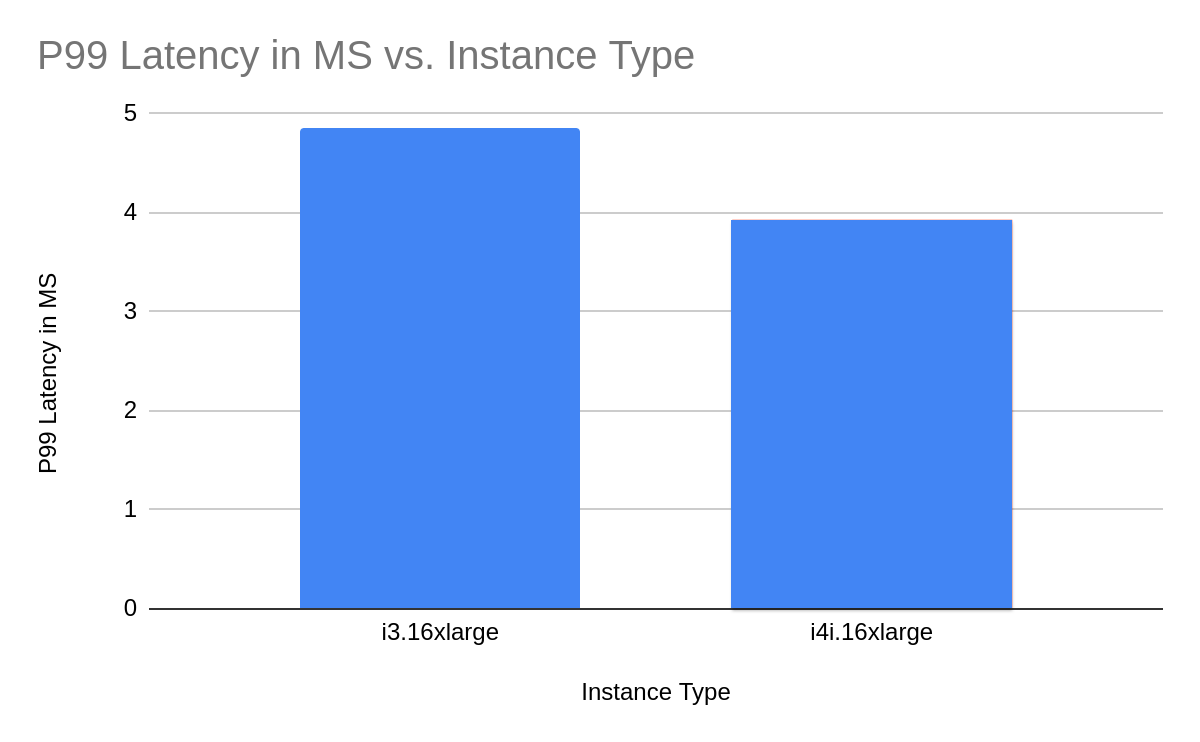

P99 latency results on i4i.16xlarge (64 vCPU servers) vs i3.16xlarge with 50% Reads / 50% Writes, measured at 50% of maximum throughput (lower is better)

On servers with the same number of cores (64 vCPUs), we achieved more than twice the throughput on the I4i, with better P99 latency.

Yes. Read that again. The long-tail latency is lower even though the throughput has more than doubled. This doubling applies to both workloads we tested. We are really excited to see this, and look forward to seeing the impact it will have for our customers.
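P99 latency is the value within which 99% of requests complete, so it exposes the slow tail that an average hides. The sketch below applies the nearest-rank definition to hypothetical, randomly generated latencies; the `percentile` helper is illustrative, and the benchmark tooling behind the charts above may compute percentiles differently (for example, from histograms).

```python
import math
import random


def percentile(samples, p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of samples <= it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


# Hypothetical latencies in ms: 98% fast requests and a 2% slow tail.
random.seed(0)
latencies = [random.uniform(0.5, 1.5) for _ in range(980)]
latencies += [random.uniform(5.0, 20.0) for _ in range(20)]

mean = sum(latencies) / len(latencies)
p99 = percentile(latencies, 99)
print(f"mean={mean:.2f} ms  p99={p99:.2f} ms")
```

Here the mean stays under 2 ms while P99 lands in the slow tail (at least 5 ms), which is why a lower P99 at higher throughput is a meaningful result.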

Note that the results above are presented per server, assuming a replication factor of 3 (RF=3).

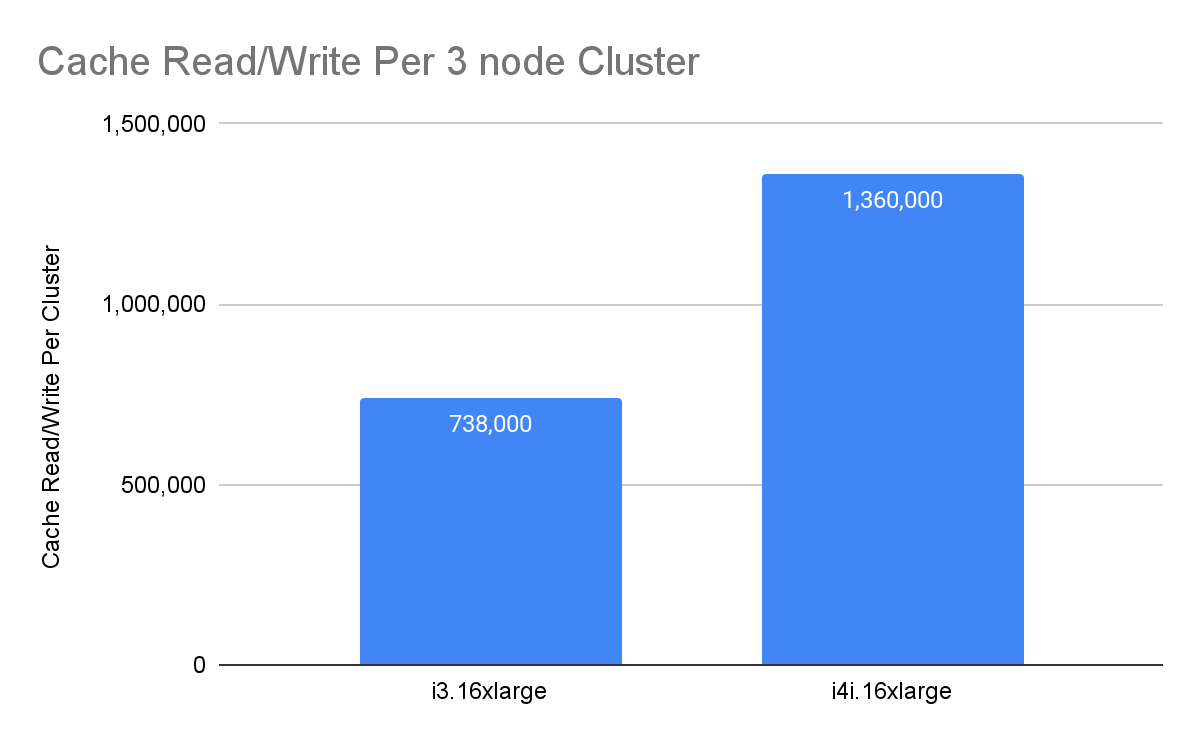

High cache hit rate performance results on i4i.16xlarge (64 vCPU servers) vs i3.16xlarge with 50% Reads / 50% Writes (3-node cluster), latency measured at 50% of maximum throughput

Just three i4i.16xlarge nodes support well over a million requests per second with a realistic workload. With the higher-end i4i.32xlarge, we expect at least twice that number of requests per second.
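The "at least twice" expectation rests on throughput scaling linearly with core count. The back-of-the-envelope sketch below treats one million requests per second as a lower bound for the three-node i4i.16xlarge cluster and projects the same per-vCPU rate onto the 128-vCPU i4i.32xlarge. Constant per-vCPU throughput is an assumption, not a measured result, and the helper names are hypothetical.

```python
# Figures from the post: 3 x i4i.16xlarge (64 vCPUs each) served "well over"
# 1,000,000 requests/s, so 1,000,000 is used here as a lower bound.
CLUSTER_OPS = 1_000_000
NODES = 3
VCPUS_PER_NODE = 64

per_node = CLUSTER_OPS / NODES
per_vcpu = per_node / VCPUS_PER_NODE


def projected_cluster_ops(vcpus_per_node: int, nodes: int = NODES) -> float:
    """Project cluster throughput, assuming per-vCPU throughput stays constant."""
    return per_vcpu * vcpus_per_node * nodes


print(f"per node >= {per_node:,.0f} ops/s, per vCPU >= {per_vcpu:,.0f} ops/s")
print(f"3 x i4i.32xlarge projected >= {projected_cluster_ops(128):,.0f} ops/s")
```

Under this assumption, a three-node i4i.32xlarge cluster would handle about two million requests per second; actual results depend on the workload and on bottlenecks beyond CPU.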

Essentially, if you have the I4i available in your region, use it for ScyllaDB. It provides superior performance – in terms of both throughput and latency – over the previous generation of EC2 instances.