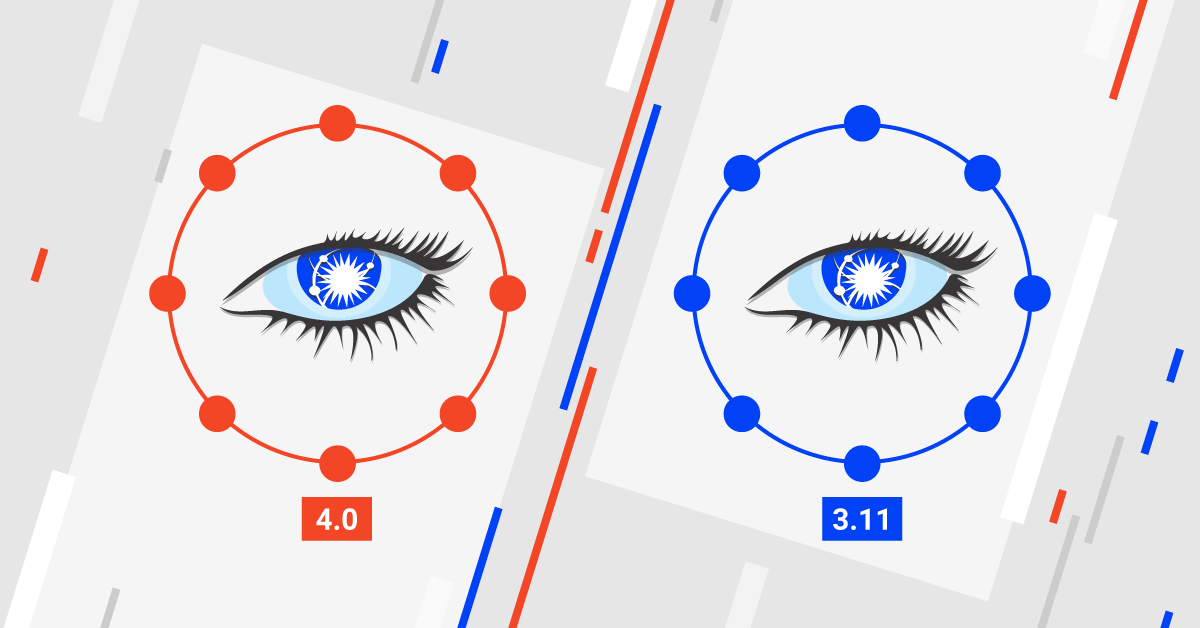

How Much Faster is Apache Cassandra 4?

After nearly six years of work, the engineers behind Apache Cassandra incremented its major version from three to four. Six years encompasses almost an entire technology cycle, with new Java virtual machines, new system kernels, new hardware, new libraries and even new algorithms. Progress in these areas presented the engineers behind Cassandra with an unprecedented opportunity to achieve new levels of performance. Did they seize it?

As engineers behind ScyllaDB, a Cassandra-compatible open source database designed from the ground up for extremely high throughput and low latency, we were curious about the performance of Cassandra 4.0. Specifically, we wanted to understand how far Cassandra 4.0 performance advanced versus Cassandra 3.11, and against ScyllaDB Open Source 4.4.3. So we put them all to the test.

We set out to measure latency and throughput for various workloads, as well as the speed of common administrative operations such as expanding clusters and running major compactions. To assess each database’s performance, we compared three-node clusters of each database on identical hardware (three i3.4xlarge machines, 48 vCPUs in total) on Amazon EC2, in a single availability zone within us-east-2.

Database cluster servers were initialized with clean Amazon Machine Images running CentOS 7.9 with ScyllaDB Open Source 4.4.3 and Ubuntu 20.04 with Cassandra 4.0 or Cassandra 3.11. We selected relatively typical current generation servers on AWS so others could replicate our tests, and to reflect a real-world setup.

This article presents a technical summary of the key performance findings with respect to:

- Throughput and latency with various distributions of data

- Adding a new node

- Doubling the cluster size

- Replacing a single node

- Performing a major compaction

For complete details on all tests, including the specific configurations you can use to perform the tests yourself, see the 43-page Cassandra 4.0 benchmark report.

Throughput and Latency

The benchmarking was a series of simple invocations of cassandra-stress with CL=QUORUM. For 30 minutes, we kept firing 10,000 requests per second and monitoring the latencies. Then we increased the request rate by another 10,000 for another 30 minutes, and so on (by 20,000 in the case of larger throughputs). The procedure was repeated until the database could no longer withstand the traffic, that is, until cassandra-stress could not achieve the desired throughput or until the 90th-percentile latencies exceeded 1 second.
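The stepping procedure can be sketched in a few lines of Python. This is only an illustration of the loop described above, not the harness used in the benchmark: `run_step` is a hypothetical callback standing in for one 30-minute cassandra-stress run, and the `tolerance` and `max_rate` parameters are assumptions added to make the stop conditions concrete. The sketch also uses a single fixed step, while the tests switched to 20,000 at larger throughputs.

```python
from typing import Callable, Optional, Tuple


def find_max_sustained_rate(
    run_step: Callable[[int], Tuple[float, float]],
    start_rate: int = 10_000,
    step: int = 10_000,
    p90_limit_ms: float = 1_000.0,
    tolerance: float = 0.99,   # assumption: "achieved" means within 1% of target
    max_rate: int = 1_000_000,  # assumption: safety cap so the loop always ends
) -> Optional[int]:
    """Raise the target rate step by step; return the last rate that held."""
    last_ok = None
    rate = start_rate
    while rate <= max_rate:
        # run_step is hypothetical: it applies `rate` requests per second for
        # one interval and reports (achieved ops/s, P90 latency in ms).
        achieved, p90_ms = run_step(rate)
        if achieved < tolerance * rate or p90_ms > p90_limit_ms:
            break  # the database can no longer withstand the traffic
        last_ok = rate
        rate += step
    return last_ok


def fake_cluster(rate: int) -> Tuple[float, float]:
    """Toy stand-in for a database that saturates at 45,000 ops/s."""
    achieved = float(min(rate, 45_000))
    p90_ms = 5.0 if rate <= 40_000 else 1_500.0
    return achieved, p90_ms


max_rate = find_max_sustained_rate(fake_cluster)
print(max_rate)
```

With the toy `fake_cluster`, the loop stops at the 50,000 step because the achieved rate falls short of the target, so the last sustained rate is the 40,000 step.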

We tested the databases with the following distributions of data:

- “Real-life” (Gaussian) distribution, with sensible cache-hit ratios of 30 to 60%

- Uniform distribution, with a close-to-zero cache hit ratio

- “In-memory” distribution, expected to yield almost 100% cache hits
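To make the link between key distribution and cache-hit ratio concrete, here is a small, self-contained simulation. It is a sketch under stated assumptions, not a model of cassandra-stress or of either database's cache: the LRU cache, the key counts, the cache size and the Gaussian spread (`stdev_fraction`) are all illustrative choices.

```python
import random
from collections import OrderedDict
from typing import List


def uniform_keys(n_keys: int, n: int, rng: random.Random) -> List[int]:
    """Every key in [0, n_keys) is equally likely to be requested."""
    return [rng.randrange(n_keys) for _ in range(n)]


def gaussian_keys(n_keys: int, n: int, rng: random.Random,
                  stdev_fraction: float = 0.05) -> List[int]:
    """Requests cluster around the middle of the key space (assumed spread)."""
    mean, stdev = n_keys / 2, n_keys * stdev_fraction
    keys: List[int] = []
    while len(keys) < n:
        k = int(rng.gauss(mean, stdev))
        if 0 <= k < n_keys:  # discard samples outside the key space
            keys.append(k)
    return keys


def lru_hit_ratio(keys: List[int], cache_size: int) -> float:
    """Replay the requests against a simple LRU cache and count the hits."""
    cache: "OrderedDict[int, bool]" = OrderedDict()
    hits = 0
    for k in keys:
        if k in cache:
            hits += 1
            cache.move_to_end(k)           # mark as most recently used
        else:
            cache[k] = True
            if len(cache) > cache_size:
                cache.popitem(last=False)  # evict the least recently used key
    return hits / len(keys)


rng = random.Random(42)
N_KEYS, N_REQUESTS, CACHE_SIZE = 100_000, 50_000, 5_000

ratios = {
    "uniform": lru_hit_ratio(uniform_keys(N_KEYS, N_REQUESTS, rng), CACHE_SIZE),
    "gaussian": lru_hit_ratio(gaussian_keys(N_KEYS, N_REQUESTS, rng), CACHE_SIZE),
    # "In-memory": the whole working set is smaller than the cache.
    "in_memory": lru_hit_ratio(uniform_keys(CACHE_SIZE // 2, N_REQUESTS, rng), CACHE_SIZE),
}
for name, ratio in ratios.items():
    print(f"{name}: {ratio:.1%} cache hits")
```

In this toy setup the uniform workload hits the cache least often, the Gaussian workload lands in between, and the in-memory workload hits almost every time, which mirrors the ordering of the three distributions listed above.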

For each of these scenarios we ran the following workloads:

- 100% writes

- 100% reads

- 50% writes and 50% reads

The following graphic highlights the maximum throughput, measured in operations per second, achieved by each database across a sampling of the scenarios and workloads.

The maximum throughput (measured in operations per second) achieved on three i3.4xlarge machines (48 vCPUs in total)

Cassandra 4.0 yielded throughput improvements of 10,000 to 20,000 operations per second over Cassandra 3.11 in several scenarios. ScyllaDB processed two to five times more requests than either of the Cassandra versions.

The following table summarizes the range of performance improvements achieved across every combination of scenario and workload we tested. The first column shows the range of improvements from Cassandra 3.11 to 4.0, and the second column highlights the additional performance improvements that ScyllaDB achieved over Cassandra 4.0. Each row represents the lowest and highest improvements achieved across a total of 10 benchmark tests.

For a specific example of how tests played out, let’s look at the results for 90- and 99-percentile latencies of UPDATE queries in a range of load rates with a uniform distribution (disk-intensive, low cache hit ratio):

The 90- and 99-percentile latencies of UPDATE queries, as measured on three i3.4xlarge machines (48 vCPUs in total) in a range of load rates

In this scenario, we issued queries that touched random partitions of the entire dataset (every partition in the 1 TB dataset had an equal chance of being updated). Both Cassandra 3.11 and Cassandra 4.0 quickly became functionally nonoperational, serving requests with tail latencies that exceeded 1 second. ScyllaDB maintained low and consistent write latencies up until 170,000 to 180,000 operations per second.

But this is just one of many tests. As mentioned previously, our benchmarks covered three different scenarios (100% reads, 100% writes, and mixed 50% reads plus 50% writes) across three distributions of data: Gaussian distribution, uniform distribution, and “in-memory” distribution expected to yield almost 100% cache hits. See the complete benchmark report to explore how each database performed in specific tests with respect to both reads and writes.

Administrative Operations

Beyond raw performance, it’s also important to consider the speed of day-to-day administrative operations, such as adding a node to a growing cluster or replacing a node that has died. The following tests benchmarked performance for these administrative tasks.

Adding One New Node

In this benchmark, we measured how long it takes to add a new node to the cluster. The cluster was initially loaded with 1 TB of data at RF=3. The reported times are the intervals between starting a Cassandra/ScyllaDB node and having it fully finished bootstrapping (CQL port open).

The time needed to add a node to an existing three-node cluster preloaded with 1 TB of data at RF=3 (ending up with 4 i3.4xlarge machines)

It is important to note that Cassandra 4.0 includes a new feature, Zero Copy Streaming (ZCS), that allows for efficient streaming of entire SSTables (Sorted Strings Tables). An SSTable is eligible for ZCS if all of its partitions need to be transferred, which can be the case when Leveled Compaction Strategy (LCS) is enabled. To demonstrate this feature, we ran the next benchmarks with the usual Size-tiered Compaction Strategy (STCS) compared to LCS.
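The eligibility rule can be expressed as a simple containment check. The sketch below is a simplified model and not Cassandra's implementation: the token ranges, the `(start, end]` convention and the function names are assumptions made for illustration.

```python
from typing import Iterable, List, Tuple

# Hypothetical representation: a token range (start, end], start exclusive.
TokenRange = Tuple[int, int]


def in_any_range(token: int, ranges: List[TokenRange]) -> bool:
    """True if the token falls inside at least one requested range."""
    return any(start < token <= end for start, end in ranges)


def eligible_for_whole_file_streaming(
    sstable_tokens: Iterable[int], requested_ranges: List[TokenRange]
) -> bool:
    """An SSTable can be sent whole only if every partition in it is needed."""
    tokens = list(sstable_tokens)
    return bool(tokens) and all(in_any_range(t, requested_ranges) for t in tokens)


requested = [(0, 100)]        # ranges the new node is taking over
narrow_sstable = [10, 50, 90]  # every partition lies inside the request
wide_sstable = [10, 50, 150]   # one partition must stay behind

print(eligible_for_whole_file_streaming(narrow_sstable, requested))
print(eligible_for_whole_file_streaming(wide_sstable, requested))
```

In this model, an SSTable whose partitions span a narrow slice of the token space is more likely to fall entirely inside the requested ranges than one that spans a wide slice.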

Doubling the Cluster Size

In this benchmark, we measured how long it takes to double the cluster node count, going from three to six nodes, ending up with six i3.4xlarge machines. The three new nodes were added sequentially, waiting for the previous one to fully bootstrap before starting the next one. The reported time spans from the instant the startup of the first new node was initiated, all the way until the bootstrap of the third new node finished.

The time needed to add three nodes to an existing three-node cluster of i3.4xlarge machines, preloaded with 1 TB of data at RF=3

Here is the timeline of adding three nodes to an existing three-node cluster.

The timeline of adding three nodes to an existing three-node cluster (ending up with six i3.4xlarge machines)

Cassandra 3.11 took 270 minutes (4.5 hours), while Cassandra 4.0 took 238 minutes 21 seconds (just shy of four hours). The total time for ScyllaDB 4.4.3 to double the cluster size was 94 minutes 57 seconds. While Cassandra 4.0 achieved an improvement over Cassandra 3.11, ScyllaDB completed the entire operation before either version of Cassandra bootstrapped its first new node.
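For readers who want to check the ratios behind these durations, the arithmetic is short. The snippet below uses only the three times reported above; the variable names are ours.

```python
def minutes(m: int, s: int = 0) -> float:
    """Convert a minutes-and-seconds duration into fractional minutes."""
    return m + s / 60


cassandra_311 = minutes(270)
cassandra_40 = minutes(238, 21)
scylladb = minutes(94, 57)

# Share of time saved by Cassandra 4.0 relative to Cassandra 3.11.
saving_40_vs_311 = (cassandra_311 - cassandra_40) / cassandra_311

# How many times longer each Cassandra version took than ScyllaDB.
ratio_40_vs_scylla = cassandra_40 / scylladb
ratio_311_vs_scylla = cassandra_311 / scylladb

print(f"Cassandra 4.0 vs 3.11: {saving_40_vs_311:.1%} less time")
print(f"Cassandra 4.0 vs ScyllaDB: {ratio_40_vs_scylla:.2f}x longer")
print(f"Cassandra 3.11 vs ScyllaDB: {ratio_311_vs_scylla:.2f}x longer")
```

Cassandra 4.0 finished in about 11.7% less time than Cassandra 3.11, and took roughly 2.5 times as long as ScyllaDB.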

Replacing a Single Node

In this benchmark, we measured how long it takes to replace a single node. One of the nodes was brought down and another one was started in its place. Throughout this process, the cluster was being agitated by a mixed read/write background load of 25,000 ops at CL=QUORUM.

The time needed to replace a node in a three-node cluster of i3.4xlarge machines, preloaded with 1 TB of data at RF=3

Performing a Major Compaction

In this benchmark, we measured how long it takes to perform a major compaction on a single node loaded with roughly 1 TB of data. The result of a major compaction is the same in both ScyllaDB and Cassandra: a read is served by a single SSTable.

Major compaction of 1 TB of data at RF=1 on i3.4xlarge machine

ScyllaDB uses a sharded architecture, which enables it to perform the major compactions on each shard concurrently. In Cassandra, major compaction is bound to a single thread.
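The structural difference can be illustrated with a toy model. The sketch below is not how either database is implemented: it treats an SSTable as a sorted list of `(key, timestamp, value)` rows, compacts by merging and keeping the newest row per key, and runs one compaction per shard on a thread pool. All names are hypothetical, and in CPython the threads only show the per-shard structure rather than real CPU parallelism.

```python
import heapq
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Tuple

Row = Tuple[str, int, str]  # (partition key, write timestamp, value)
SSTable = List[Row]         # rows sorted by key, one row per key


def major_compact(sstables: List[SSTable]) -> SSTable:
    """Merge all sorted SSTables into one, keeping the newest row per key."""
    merged: SSTable = []
    for key, ts, value in heapq.merge(*sstables):
        if merged and merged[-1][0] == key:
            if ts > merged[-1][1]:
                merged[-1] = (key, ts, value)  # newer write wins
        else:
            merged.append((key, ts, value))
    return merged


def compact_all_shards(shards: Dict[int, List[SSTable]]) -> Dict[int, SSTable]:
    """Run one independent major compaction per shard, concurrently."""
    with ThreadPoolExecutor(max_workers=max(1, len(shards))) as pool:
        futures = {
            shard: pool.submit(major_compact, tables)
            for shard, tables in shards.items()
        }
        return {shard: future.result() for shard, future in futures.items()}


shards = {
    0: [[("a", 1, "old"), ("c", 1, "x")], [("a", 2, "new"), ("b", 1, "y")]],
    1: [[("z", 5, "q")]],
}
compacted = compact_all_shards(shards)
print(compacted[0])
```

Because each shard owns a disjoint set of SSTables in this model, the compactions need no coordination with each other, which is what makes running them side by side straightforward.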

Wrapping Up

Cassandra 4.0 is an advancement from Cassandra 3.11. It is clear that Cassandra 4.0 has aptly piggy-backed on advancements to the JVM, and upgrading from Cassandra 3.11 to Cassandra 4.0 will benefit many use cases.

In our test setup, Cassandra 4.0 showed a 25% improvement for a write-only disk-intensive workload and 33% improvement for cases of read-only with either a low or high cache hit rate. Otherwise, the maximum throughput between the two Cassandra releases was relatively similar.

However, most workloads do not run at maximum utilization, where tail latency is usually poor. In our tests, we also marked the throughput each database sustained under a service-level agreement of less than 10 milliseconds for P90 and P99 latency. At this service level, Cassandra 4.0, powered by the new JVM/GC (JVM garbage collection), can sustain twice the throughput of Cassandra 3.11. Outside of sheer performance, we tested a range of administrative operations, including adding nodes, doubling a cluster, replacing a node and running a major compaction, all of them under emulated production load. Cassandra 4.0 shortens these admin operation times by up to 34%.

But for data-intensive applications that require ultra-low latency with extremely high throughput, consider other options such as ScyllaDB. ScyllaDB provides the same Cassandra Query Language (CQL) interface and queries, the same drivers, even the same on-disk SSTable format, but with a modern architecture designed to eliminate Cassandra performance issues, limitations and operational barriers. ScyllaDB consistently and significantly outperformed Cassandra 4.0 on our benchmarks. On identical hardware, ScyllaDB withstood up to 5x greater traffic and offered lower latencies than Apache Cassandra 4.0 in almost every tested scenario. ScyllaDB also completed admin tasks 2.5 to 4 times faster than Cassandra 4.0.

Moreover, ScyllaDB’s feature set goes beyond Cassandra’s in many respects. The bottom line: Cassandra’s performance has improved since its initial release in 2008, but ScyllaDB, with its shared-nothing, shard-per-core architecture that takes full advantage of modern infrastructure and networking capabilities, has leaped ahead of Cassandra.