5 More Intriguing ScyllaDB Capabilities You Might Have Overlooked

You asked for it! Here are another 5 unique ScyllaDB capabilities for power users!

We were very happy (and grateful!) to receive TONS of positive feedback when 5 Intriguing ScyllaDB Capabilities You Might Have Overlooked was published. Some readers were inspired to try out cool features they weren’t previously aware of. Others asked why some specific capability wasn’t covered. And others just wanted to discover more of these “hidden gems.” 🙂

When we first decided to write a “5 intriguing capabilities” blog, we simply featured the first 5 capabilities that came to mind. It was by no means a “top 5” ranking. Of course, there are many more little-known capabilities that are providing value to teams just like yours. Here’s another round of cool features you might not know about.

Load and Stream

The nodetool refresh command has several purposes: migrate Apache Cassandra SSTables to ScyllaDB, restore a cluster via ScyllaDB Manager, mirror a ScyllaDB cluster to another, and even rollback to a previous release when things go wrong. In a nutshell, a refresh simply loads newly placed SSTables into the system.

One of the problems with nodetool refresh is that, by default, it doesn’t account for the actual ownership of the data. That means that when you try to restore data to a cluster with different topology, replication settings, or even a different token ownership, you would need to load your data multiple times (since every refresh would discard the data not owned by the receiving node).

For example, assume you are refreshing data from a 3-node cluster to a single-node cluster. Also assume that the replication factor is 2. The correct way to move data around would be to copy the SSTables from all 3 source nodes, and – one at a time – refresh it at the destination. Failure to do so could potentially result in data being lost during the process, as we can’t be sure that the data from all 3 source nodes are in sync.

It gets worse if your destination is a 3-node cluster with different token ownership. Instead of copying and loading the data 3 times (as in the previous example), you’d have to run it 9 times in total (3 source nodes x 3 destination nodes) to properly migrate with no data loss. See the problem now?

That’s what Load and Stream addresses. The concept is really simple: Rather than ignoring and dropping the tokens the node is not responsible for, just stream it over to its rightful peer members! This simple change greatly speeds up and simplifies the process of moving data around, and is much less error prone.

We introduced Load and Stream in ScyllaDB 5.1 and extended the nodetool refresh command syntax. Note that the default behavior remains, so you must adjust the command syntax accordingly to tell your refresh command to also stream data.

Timeout per Operation

Sometimes you know beforehand that you are about to run a query that may take considerably longer than your average. Perhaps you are running an aggregation, a full table scan, or maybe your workload simply counts with distinct SLAs for different types of queries.

One Apache Cassandra limitation is the fact that timeouts are hardcoded, which makes it extremely inflexible for processing queries that might knowingly take longer than usual. The default is for the coordinator to timeout a write taking longer than 2 seconds, and a read taking longer than 5 seconds.

Although the defaults are a reasonable amount of time for writes and reads, it is worth noting that those defaults are unacceptable for many low latency use cases. Therefore, many use cases often configure stricter server-side timeout settings, and even configure context timeouts within their applications in order to prevent requests from taking too long.

In Apache Cassandra, lowering your server-side timeout settings effectively forbids you from running any kinds of queries past your configured settings. And increasing it too much makes you subject to retry storms. We needed a solution: How to prevent long-running queries from timing out while avoiding retry storms and complying with aggressive P99 latency requirements?

The answer is USING TIMEOUT, a ScyllaDB CQL extension that allows you to dynamically adjust – on a per query basis – the coordinator timeout. This allows you to notify ScyllaDB that your query should not stick with the server defaults and then the coordinator will use your provided value instead.

We introduced the USING TIMEOUT extension in ScyllaDB 4.4, and it is extremely useful when applied in conjunction with the BYPASS CACHE option discussed in the first installment of this blog.

Live Updateable Parameters

Let’s be honest: No one likes to run a cluster rolling restart whenever you have to change configuration parameters. The larger your deployment gets, the more nodes you will have to restart – and the longer it takes for a parameter to become active cluster-wide.

ScyllaDB solves this problem with live updateable parameters, which follows a Unix-like syntax to notify the node to re-read its configuration file (/etc/scylla/scylla.yaml) and refresh its configuration.

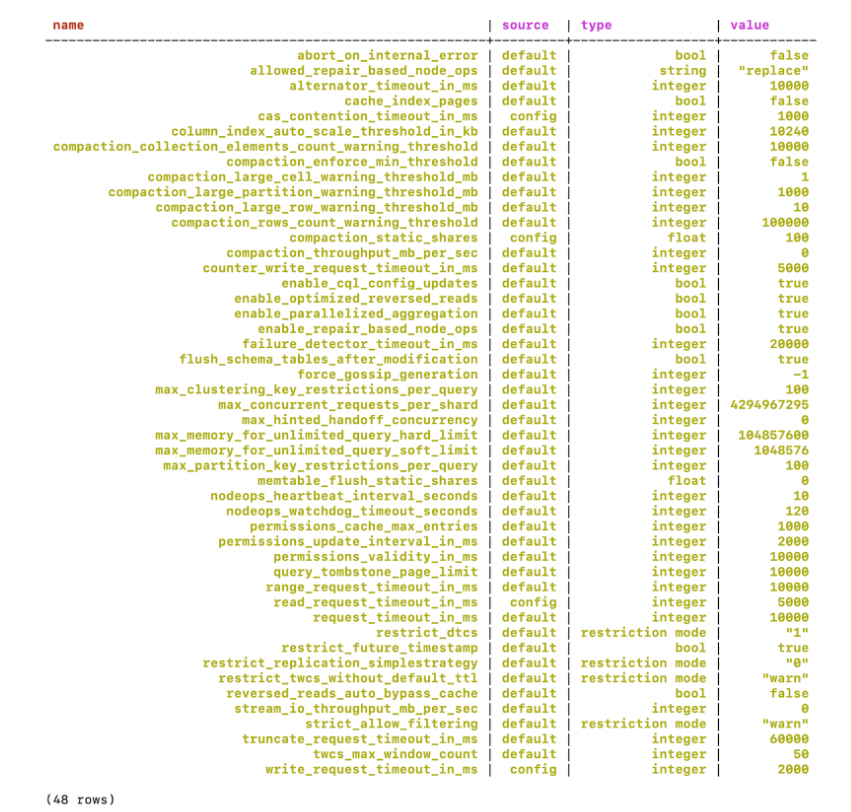

The ability for ScyllaDB to reload its runtime configuration was introduced 4 years ago and, over time, we have greatly extended its capabilities to support more features. We know it’s definitely a challenge to find out on which release every single option got introduced. To help, here’s a list of (currently) supported parameters that can get changed at database runtime. Consult the output of scylla --help for an explanation of each:

To live update a parameter, simply update its value in ScyllaDB’s configuration, and SIGHUP the ScyllaDB PID. For example:

# echo 'compaction_static_shares: 100' >> /etc/scylla/scylla.yaml

# kill -HUP $(pidof scylla)And you should see in the logs:

INFO 2023-08-07 21:55:34,515 [shard 0] init - re-reading configuration file

INFO 2023-08-07 21:55:34,524 [shard 0] compaction_manager - Updating static shares to 100

INFO 2023-08-07 21:55:34,526 [shard 0] init - completed re-reading configuration fileGuardrails

A ScyllaDB deployment is very flexible. You can create single-node clusters, use a replication factor of 1, and even write data using a timestamp in the far future to be deleted by the time robots take over human civilization. We won’t prevent you from choosing whatever setting makes sense for your deployment, as we believe you know what you are doing. 🙂

Guardrails are settings made to prevent you (or others) from shooting themselves in the foot. Just like the previous live updateable parameters we’ve seen, it is part of a continuous process to give the database administrator more control over which restrictions make sense over deployments.

Some known guardrails enabled by default are, for example, the DateTiered compaction strategy (which has long been deprecated in favor of the Time Window Compaction Strategy) and the deprecated Thrift API.

The easiest way to find out which Guardrails parameters are available for use is to simply filter by the guardrails label in our GitHub project. There, you will find all options you might be interested in, as well as which ones our Engineering team is planning to implement in the foreseeable future.

Compaction & Streaming Rate-Limiting

We previously discussed how ScyllaDB achieves performance isolation of its components and self-tunes to the workload in question. This means that under normal and ideal temperature and pressure conditions, you ideally should not have to bother with throttling background activities, such as compaction or streaming.

However, there may be some situations where you still want to throttle how much I/O each class is allowed to consume – either for a specific period of time or simply because you suspect a scheduler problem may be affecting your latencies.

ScyllaDB 5.1 introduced two newer live updateable parameters to address these situations:

compaction_throughput_mb_per_sec– Throttles compaction to the specified total throughput across the entire systemstream_io_throughput_mb_per_sec– Throttles streaming I/O (including repairs, repair-based node operations and legacy topology operations)

Note that it is not recommended to change these values from their defaults (0 – disable any throttling), as ScyllaDB should dynamically be able to choose the best bandwidth to consume according to your system load.