Cobli’s Drive From Cassandra to ScyllaDB Cloud

Dissecting a Real-Life Migration Process

Editor’s note: This is the first post in a series; part 2 is Cobli’s Journey from Cassandra to ScyllaDB: Dissecting a Real-Life Migration Process. Cobli’s blogs were originally published on Medium in Portuguese.

It’s kind of common sense: database migration is one of the most complex and risky operations the “cooks in the kitchen” (platform, infrastructure, Ops, SRE and surroundings) can face, especially when you are dealing with the company’s “main” database. That’s a very abstract definition, I know. But if you work at a startup, you’ve probably already faced such a situation.

Still — once in a while — the work and the risk are worth it, especially if there are considerable gains in terms of cost and performance.

The purpose of this series of posts is to describe the strategic decisions, steps taken and the real experience of migrating a database from Cassandra to ScyllaDB from a primarily technical point of view.

Let’s start with the use case.

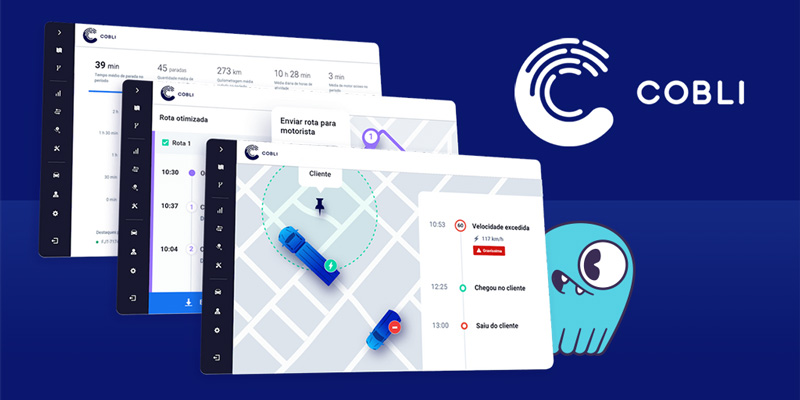

Use Case: IoT (Vehicle Fleet Telemetry)

Here at Cobli, we work with vehicle fleet telematics. Let me explain: every vehicle in our fleet has an IoT device that continuously sends data packets every few seconds.

Overall, this data volume becomes significant: at peak usage, it exceeds 4,000 packets per second. Each packet must be triaged, processed and stored as quickly as possible. This processing produces information relevant to our customers (for example, vehicle overspeed alerts, journey history, etc.), and this information must also be saved. Everything must happen as close as possible to “real time”.
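To make the write pressure concrete, here is a minimal Python sketch of the per-packet step described above. The packet fields, the fixed speed limit and the function names are hypothetical (the post does not describe Cobli’s payload or pipeline); the point is that every packet produces at least one write for the raw data, plus more writes for any derived information such as an overspeed alert.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass(frozen=True)
class Packet:
    """Hypothetical telemetry packet; real device payloads carry much more."""
    vehicle_id: str
    timestamp_ms: int
    speed_kmh: float


@dataclass(frozen=True)
class OverspeedAlert:
    """Hypothetical derived record, stored alongside the raw packet."""
    vehicle_id: str
    timestamp_ms: int
    speed_kmh: float
    limit_kmh: float


Row = Union[Packet, OverspeedAlert]


def process_packet(packet: Packet, limit_kmh: float) -> List[Row]:
    """Return every row this packet produces: the raw packet plus any derived alert."""
    rows: List[Row] = [packet]  # the raw telemetry is always persisted
    if packet.speed_kmh > limit_kmh:
        rows.append(OverspeedAlert(packet.vehicle_id, packet.timestamp_ms,
                                   packet.speed_kmh, limit_kmh))
    return rows


rows = process_packet(Packet("vehicle-42", 1_700_000_000_000, 97.0), limit_kmh=80.0)
print(len(rows))  # 2: the raw packet and one overspeed alert
```

At the reported peak of 4,000 packets per second, every derived row like this one multiplies the write load, which is why write capacity tops the list of requirements.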

The main requirements our features place on a “database” are high availability and write capacity, a mission successfully delegated to Cassandra.

I’m not going to elaborate on all the reasons for migrating to ScyllaDB. However, I can summarize them as the search for a faster and, above all, cheaper Cassandra.

For those who are using Cassandra and don’t know ScyllaDB, this page is worth taking a look at. In short: ScyllaDB is a revamped Cassandra, focused on performance while maintaining a high level of compatibility with Cassandra (in terms of features, APIs, tools, CQL, table types and so on…).

Which ScyllaDB?

ScyllaDB is similar to Cassandra when it comes to the way it is distributed: there is an open source version, an enterprise version, and a SaaS version (database as a service or DBaaS). It’s already ingrained in Cobli’s culture: using SaaS enables us to focus on our core business. The choice for SaaS was unanimous.

The ScyllaDB world is relatively new, and the only SaaS available is the ScyllaDB Cloud offering (at least, we haven’t found any other… yet). We based our cluster sizing and cost projections on the options ScyllaDB Cloud provides, which also simplified the process a bit.

Another point that made us comfortable was how the SaaS integrates with our infrastructure, using a model common in the AWS world: Bring Your Own Account. We basically grant ScyllaDB Cloud access to create resources in a specific AWS account, but we continue to own those resources.

We did a small exploratory round with ScyllaDB Cloud:

- We set up a free-tier cluster linked to our AWS account

- We configured connectivity to our environment (VPC peering) and tested it

- We validated the operating and monitoring interfaces provided by ScyllaDB Cloud.

ScyllaDB Cloud provides a ScyllaDB Monitoring interface built into the cluster. It’s not a closed solution — it’s part of the open source version of ScyllaDB — but the advantage is that it is managed by them.

We did face a few speed bumps. One important difference compared to Cassandra: there are no per-table or per-keyspace metrics, only global ones. This restriction comes from the ScyllaDB core, not from ScyllaDB Cloud. There seem to have been recent developments on that front, but we simply accepted this drawback in our migration.

Over time, we also found that ScyllaDB Cloud retains metrics for around 40 to 50 days, and some analyses need a longer history than that. Fortunately, the metrics can be exported in Prometheus format, which a huge range of integrations accept, and replicated in other software.
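Replicating metrics elsewhere starts with reading the Prometheus text exposition format. The following is a deliberately simplified, stdlib-only Python sketch (it ignores escaped quotes and braces inside label values, among other edge cases); for real use, an official Prometheus client library or Prometheus itself is the safer choice. The metric name and labels in the example are made up.

```python
import re
from typing import Dict, Iterator, Optional, Tuple

# One sample line: name{labels} value [timestamp]. Simplified on purpose:
# escaped quotes or braces inside label values are not supported.
_SAMPLE = re.compile(
    r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)'
    r'(?:\{(?P<labels>[^}]*)\})?'
    r'\s+(?P<value>\S+)'
    r'(?:\s+(?P<ts>-?\d+))?\s*$'
)
_LABEL = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="([^"]*)"')

Sample = Tuple[str, Dict[str, str], float, Optional[int]]


def parse_samples(text: str) -> Iterator[Sample]:
    """Yield (name, labels, value, timestamp_ms) for each sample line."""
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and # HELP / # TYPE comments
        match = _SAMPLE.match(line)
        if match is None:
            continue  # lines this simplified parser does not understand
        labels = dict(_LABEL.findall(match.group("labels") or ""))
        ts = match.group("ts")
        yield (match.group("name"), labels, float(match.group("value")),
               int(ts) if ts is not None else None)


exposition = """# HELP example_latency_ms Made-up metric for illustration
# TYPE example_latency_ms gauge
example_latency_ms{instance="10.0.0.1",shard="0"} 42.5
example_latency_ms{instance="10.0.0.1",shard="1"} 37 1700000000000
"""
for sample in parse_samples(exposition):
    print(sample)
```

Each parsed tuple can then be written to whatever long-term store holds the history beyond ScyllaDB Cloud’s retention window.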

Lastly, we missed a backup administration interface (scheduling, on-demand requests, deleting old backups, etc.). Backup settings go through the ticket system and, of course, interactions with customer support.

First Question: is it Feasible?

From a technical point of view, the first step of our journey was to assess whether ScyllaDB was viable in terms of functionality and performance, and whether the data migration would fit within our time and effort constraints.

Functional Validation

We brought up a container with the “target” version of ScyllaDB (Enterprise 2020.1.4), ran our schema migrations against it (yes, we use migrations in Cassandra!) and voilà! They ran on ScyllaDB without changing a single line.

Disclaimer: It may not always be like this. ScyllaDB keeps a compatibility information page that is worth visiting to avoid surprises. [Editor’s note: also read this recent article on Cassandra and ScyllaDB: Similarities and Differences.]
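The post doesn’t say which migration tool Cobli uses. As a hedged illustration of the idea (versioned CQL scripts applied in order), here is a minimal Python runner; the keyspace, table and the `execute` callback are hypothetical. Because the scripts are plain CQL, the same list can be applied to Cassandra or to ScyllaDB unchanged.

```python
from typing import Callable, Dict, List, Set

# Hypothetical versioned migrations (version -> CQL). Plain CQL, so the same
# scripts can run against Cassandra or ScyllaDB.
MIGRATIONS: Dict[int, str] = {
    1: "CREATE KEYSPACE IF NOT EXISTS telemetry WITH replication = "
       "{'class': 'SimpleStrategy', 'replication_factor': 1}",
    2: "CREATE TABLE IF NOT EXISTS telemetry.packets ("
       "vehicle_id text, ts timestamp, speed_kmh double, "
       "PRIMARY KEY (vehicle_id, ts))",
}


def apply_pending(migrations: Dict[int, str], applied: Set[int],
                  execute: Callable[[str], object]) -> List[int]:
    """Run migrations not yet in `applied`, in version order; return what ran."""
    newly_applied: List[int] = []
    for version in sorted(migrations):
        if version in applied:
            continue
        execute(migrations[version])  # e.g. session.execute from the DataStax Python driver
        applied.add(version)  # a real runner would persist this in a table
        newly_applied.append(version)
    return newly_applied


executed: List[str] = []
print(apply_pending(MIGRATIONS, applied={1}, execute=executed.append))  # [2]
```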

Our functional validation came down to running the complete test suite of every application that used Cassandra, but pointing it at the dockerized ScyllaDB instead of Cassandra.
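One simple way to point an existing test suite at a different CQL backend is to read the connection target from the environment, so nothing in the tests themselves changes. This is a hypothetical helper, not Cobli’s setup; the variable names `CQL_CONTACT_POINTS` and `CQL_PORT` are assumptions. Since ScyllaDB speaks the same CQL protocol on the standard port 9042, the driver code stays the same.

```python
from typing import List, Mapping, Tuple


def cql_target(env: Mapping[str, str]) -> Tuple[List[str], int]:
    """Return (contact_points, port) for the test suite.

    CQL_CONTACT_POINTS and CQL_PORT are hypothetical variable names; defaults
    target a local container exposing the standard CQL port 9042.
    """
    raw_hosts = env.get("CQL_CONTACT_POINTS", "127.0.0.1")
    hosts = [h.strip() for h in raw_hosts.split(",") if h.strip()]
    port = int(env.get("CQL_PORT", "9042"))
    return hosts, port


# In the test setup, e.g. with the DataStax Python driver:
#   hosts, port = cql_target(os.environ)
#   session = Cluster(contact_points=hosts, port=port).connect()
print(cql_target({"CQL_CONTACT_POINTS": "scylla-test"}))  # (['scylla-test'], 9042)
```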

In most cases, we didn’t have any problems. However, one of the tests returned:

partition key Cartesian product size 4735 is greater than maximum 100

The query in question is a SELECT with an IN clause on the partition key. Cassandra already discourages this usage, and ScyllaDB restricts it more aggressively: the number of partition key combinations an IN query expands to (the Cartesian product of the IN values across the partition key columns) is capped by configuration.

We changed the configuration according to our use case, the test passed, and we moved on.
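To see where a number like 4735 comes from: with a two-column partition key, IN lists of 5 and 947 values expand to 5 × 947 = 4735 partition keys (a made-up split; the post doesn’t show the query). If we recall correctly, recent ScyllaDB versions expose the cap as `max_partition_key_restrictions_per_query` in scylla.yaml, but check the documentation for your version. Raising it is what we did; the hedged Python sketch below shows the client-side alternative of splitting the last IN list into sub-queries that each stay under the limit. Column values and function names are hypothetical.

```python
from math import prod
from typing import List, Sequence


def cartesian_product_size(in_lists: Sequence[Sequence[object]]) -> int:
    """Number of partition-key combinations a multi-column IN query expands to."""
    return prod(len(values) for values in in_lists)


def split_last_in_list(in_lists: Sequence[Sequence[object]],
                       limit: int) -> List[List[List[object]]]:
    """Split the last column's IN list so each sub-query expands to <= `limit` keys.

    Assumes the other columns' IN lists alone multiply to at most `limit`.
    """
    fixed = cartesian_product_size(in_lists[:-1])
    if fixed > limit:
        raise ValueError("the other IN lists already exceed the limit")
    if fixed == 0:
        return []  # an empty IN list matches nothing
    step = limit // fixed  # values of the last column that fit in one sub-query
    head = [list(values) for values in in_lists[:-1]]
    last = list(in_lists[-1])
    return [head + [last[i:i + step]] for i in range(0, len(last), step)]


regions = [f"r{i}" for i in range(5)]    # hypothetical first key column
devices = [f"d{i}" for i in range(947)]  # hypothetical second key column
print(cartesian_product_size([regions, devices]))  # 4735
sub_queries = split_last_in_list([regions, devices], limit=100)
print(len(sub_queries))  # 48
```

Splitting trades one oversized query for many small ones that the driver can run concurrently; raising the limit keeps the code unchanged but lets a single query fan out to thousands of partitions.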

Performance Validation

We spun up instances of Cassandra and ScyllaDB with their “production” settings, populated some tables with the help of dsbulk, and ran some stress tests.

The tests basically replayed pre-existing read/write scenarios from our platform using cassandra-stress.
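The post does not show the dsbulk invocations used to populate the tables. As a hedged sketch, the helper below builds the argument list for `dsbulk unload` and `dsbulk load` with dsbulk’s short options `-h` (contact points), `-k` (keyspace), `-t` (table) and `-url` (file or directory); the hosts, keyspace, table and path are hypothetical.

```python
from typing import List


def dsbulk_command(operation: str, host: str, keyspace: str,
                   table: str, url: str) -> List[str]:
    """Build argv for `dsbulk unload` (table -> files) or `dsbulk load` (files -> table)."""
    if operation not in ("unload", "load"):
        raise ValueError("operation must be 'unload' or 'load'")
    return ["dsbulk", operation,
            "-h", host,      # contact point of the source or target cluster
            "-k", keyspace,
            "-t", table,
            "-url", url]     # output location for unload, input for load


# Hypothetical hosts and paths: unload from Cassandra, then load into ScyllaDB.
unload = dsbulk_command("unload", "cassandra-1", "telemetry", "packets", "/data/packets")
load = dsbulk_command("load", "scylla-1", "telemetry", "packets", "/data/packets")
print(" ".join(unload))
# Each list can be run with subprocess.run(cmd, check=True).
```

Building an argument list instead of a shell string avoids quoting problems when paths or hosts contain unusual characters.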

Before testing, we switched from Cassandra’s Size-Tiered Compaction Strategy to ScyllaDB’s newer Incremental Compaction Strategy to reduce space amplification, as ScyllaDB recommended. Note that this compaction strategy is only available in the Enterprise version.
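Switching a table’s compaction strategy is a single CQL `ALTER TABLE`. The small Python helper below builds that statement; the keyspace and table names are hypothetical, and `IncrementalCompactionStrategy` is only accepted by ScyllaDB Enterprise.

```python
import re

_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def compaction_statement(keyspace: str, table: str,
                         strategy: str = "IncrementalCompactionStrategy") -> str:
    """CQL that switches a table to another compaction strategy."""
    for name in (keyspace, table, strategy):
        if not _IDENTIFIER.match(name):  # avoid building CQL from unexpected input
            raise ValueError(f"unexpected identifier: {name!r}")
    return (f"ALTER TABLE {keyspace}.{table} "
            f"WITH compaction = {{'class': '{strategy}'}}")


print(compaction_statement("telemetry", "packets"))
# ALTER TABLE telemetry.packets WITH compaction = {'class': 'IncrementalCompactionStrategy'}
```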

ScyllaDB delivered surprising numbers, decreasing query latencies by 30% to 40% even with half the hardware (in terms of CPU).

These tests later proved insufficient, because production is much more complex than what we were able to simulate. We hit some mishaps during the migration that deserve a post of their own, but once they were properly fixed, ScyllaDB’s performance benefits more than made up for them.

Exploring the Data Migration Process

It was important to know how, and how soon, we could have ScyllaDB holding all the data from good old Cassandra.

The result of this task guided us in putting together a migration roadmap and influenced our choice of migration strategy.

We compared two “migrator” alternatives: dsbulk and scylla-migrator.

- dsbulk: A CLI application that unloads Cassandra data into an intermediate format (CSV or JSON, for example), which a later load operation can read. It runs as a single JVM process, so it only scales vertically.

- scylla-migrator: A Spark job created and maintained by ScyllaDB, with various performance and parallelism settings for copying data massively. Like any Spark job, it scales horizontally across as many worker nodes as the Spark cluster provides. It implements a savepoint mechanism that lets the process restart from the last successfully copied batch after a failure.
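scylla-migrator is a Spark job and the code below is not taken from it. It is a simplified, single-process Python illustration of the savepoint idea, assuming a “batch” is a token range: completed ranges are recorded in a file after each copy, and a rerun skips them. The file format, range values and the simulated failure are hypothetical.

```python
import json
import tempfile
from pathlib import Path
from typing import Callable, List, Set, Tuple

TokenRange = Tuple[int, int]


def load_savepoint(path: Path) -> Set[TokenRange]:
    """Token ranges already copied, or an empty set on a fresh start."""
    if not path.exists():
        return set()
    return {(start, end) for start, end in json.loads(path.read_text())}


def copy_with_savepoints(ranges: List[TokenRange],
                         copy_range: Callable[[TokenRange], None],
                         savepoint: Path) -> List[TokenRange]:
    """Copy each range once; after a crash, rerunning skips finished ranges."""
    done = load_savepoint(savepoint)
    copied: List[TokenRange] = []
    for token_range in ranges:
        if token_range in done:
            continue  # finished before the previous failure
        copy_range(token_range)  # may raise; earlier progress is already on disk
        done.add(token_range)
        savepoint.write_text(json.dumps(sorted(done)))  # persist after every range
        copied.append(token_range)
    return copied


class FlakyCopier:
    """Simulated copy step that crashes once on one range."""

    def __init__(self, fail_on: TokenRange) -> None:
        self.fail_on = fail_on
        self.failed = False

    def __call__(self, token_range: TokenRange) -> None:
        if token_range == self.fail_on and not self.failed:
            self.failed = True
            raise RuntimeError("simulated worker failure")


ranges = [(0, 10), (10, 20), (20, 30), (30, 40)]
savepoint = Path(tempfile.mkdtemp()) / "savepoint.json"
copier = FlakyCopier(fail_on=(20, 30))
try:
    copy_with_savepoints(ranges, copier, savepoint)
except RuntimeError:
    pass  # the "job" died after copying two ranges
print(copy_with_savepoints(ranges, copier, savepoint))  # [(20, 30), (30, 40)]
```

For a multi-day copy, persisting progress after every unit of work is what turns a crash into a short delay instead of a restart from zero.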

We dropped the option of copying data files directly from Cassandra to ScyllaDB on the recommendation of our contacts at ScyllaDB. ScyllaDB should be the one to reorganize data on disk, because its partitioning scheme is different: data is split per CPU core within each node, not just per node.
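As we understand it, ScyllaDB maps each Murmur3 token to a shard (one per CPU core) roughly as in the Python model below, with the scylla.yaml setting `murmur3_partitioner_ignore_msb_bits` (default 12) deciding how many high bits are discarded first. Treat this as an explanatory model under that assumption, not a reference implementation. It shows why Cassandra’s data files cannot simply be dropped in: which shard owns a token depends on each ScyllaDB node’s core count, a layout Cassandra’s files know nothing about.

```python
MASK_64 = (1 << 64) - 1


def shard_of(token: int, shard_count: int, ignore_msb_bits: int = 12) -> int:
    """Map a signed 64-bit Murmur3 token to a shard in 0..shard_count-1."""
    if not -(1 << 63) <= token < (1 << 63):
        raise ValueError("token must be a signed 64-bit integer")
    unbiased = (token + (1 << 63)) & MASK_64            # shift tokens to 0 .. 2**64-1
    shifted = (unbiased << ignore_msb_bits) & MASK_64   # discard the top bits
    return (shifted * shard_count) >> 64                # scale into [0, shard_count)


token = 1_234_567_890_123_456_789  # hypothetical partition token
for cores in (4, 8, 16):
    print(cores, shard_of(token, cores))
```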

In preliminary tests, already running against Cassandra and ScyllaDB in production, we migrated around 3 to 4 GB of data per hour with dsbulk and around 30 GB per hour with scylla-migrator. These results obviously depend on many factors, but they gave us an idea of what each tool could do.

To estimate the maximum migration time, we ran scylla-migrator on our largest table (840 GB) and got about 10 GB per hour, or about 8 days of migration 24/7.

With all these results in hand, we decided to move forward with the migration. The next question was “how?”. In the next part of this series, we’ll tackle the toughest decision in migrations of this type: downtime tracking/prediction.

See you soon!
